What is Canary Testing? A Definitive Guide to Safer Deployments

Canary testing is a release strategy that every software tester should understand. A new feature or update is first rolled out to a small subset of users or systems, and only then deployed to the entire production environment.

The idea behind this approach is to minimize the impact of potential issues by testing the waters with a limited audience. This practice helps teams identify bugs, performance issues, or other problems in real-world conditions, without risking the stability of the entire system.

For software testers, understanding canary testing is crucial because it ensures smoother and safer releases while providing valuable insights into how changes affect users. It is a powerful tool for maintaining quality and reliability in continuous delivery pipelines.

What is Canary Testing?

Canary testing is a method used in software development to gradually roll out new updates or features to a small, specific group of users before releasing them to everyone.

Think of it like sending a “canary” into a mine to check for dangers before the rest of the team goes in. The goal is to monitor how the new changes perform in a real-world environment and identify any issues before a wider release.

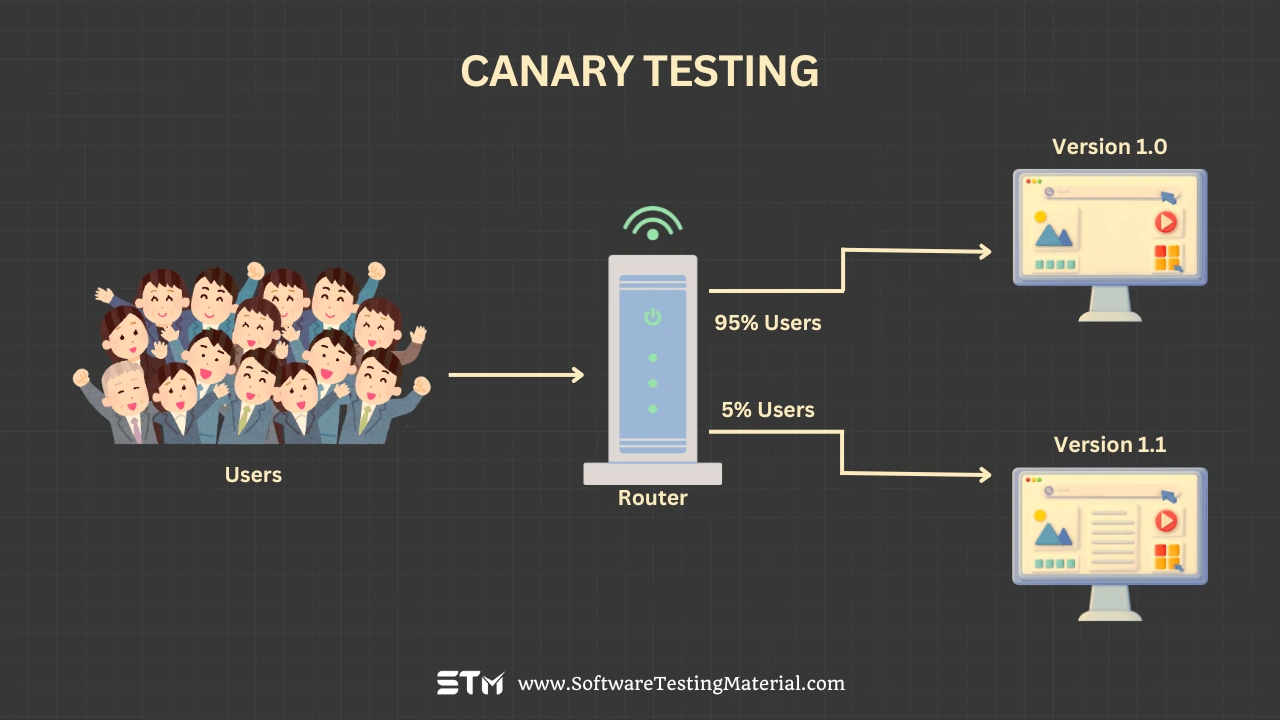

This process typically involves selecting a small percentage of users to receive the update while the rest continue using the current version. While this group uses the update, the new version is monitored closely for performance, errors, and unusual behavior. Feedback and data from this group help the team evaluate whether the update is stable and working as expected. If problems are detected, developers can address them quickly, reducing the risk to the overall system. Once everything looks good, the update is gradually extended to more users.
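One common way to select “a small percentage of users” is to hash a stable user ID into one of 100 buckets and give the new version to users whose bucket falls below the rollout percentage. The Python sketch below assumes string user IDs; the function names and the default salt `checkout-v2` are hypothetical. Because a user’s bucket never changes, everyone already in the canary group stays in it as the percentage grows.

```python
import hashlib


def bucket(user_id: str, salt: str = "checkout-v2") -> int:
    """Map a user ID to a stable bucket in the range 0..99.

    The default salt is a hypothetical per-feature value; changing it reshuffles users.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer and reduce it to 0..99.
    return int.from_bytes(digest[:8], "big") % 100


def in_canary(user_id: str, percent: int, salt: str = "checkout-v2") -> bool:
    """Return True if the user should get the new version at this rollout percentage."""
    return bucket(user_id, salt) < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for pct in (1, 5, 25):
        share = sum(in_canary(u, pct) for u in users) / len(users)
        print(f"target {pct}% -> {share:.1%} of users in the canary group")
```

Extending the rollout is then just a matter of raising `percent`; no per-user state has to be stored.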

Why should you perform Canary Testing?

Canary testing is especially valuable for its proactive approach to quality assurance. It helps to catch problems early before they affect all users. By releasing an update to a small group first, you can observe how the software behaves in real-world conditions. If issues arise, they can be fixed quickly without causing widespread disruption. This approach reduces the risk of deploying a faulty update to everyone.

It also allows you to gather feedback from the initial group of users, which can highlight any unexpected bugs or usability issues. This real-world feedback is invaluable for improving the software.

Additionally, canary testing improves user trust. When you show that updates are carefully tested and issues are addressed quickly, users feel confident in your product. For teams, it saves time and money by avoiding large-scale rollbacks or emergency fixes after a full release. Overall, canary testing is a smart and safe way to ensure high-quality software for all users.

When should you perform Canary Testing?

You should perform canary testing whenever you are planning to release a new feature, update, or change to your application or software. It is especially useful when the updates involve significant changes to the code, infrastructure, or user-facing elements. Canary testing helps to verify that the changes work as expected without impacting a large portion of your users.

The best time to carry out canary testing is right before releasing an update to your entire user base. First, deploy the new version to a small, controlled group of users. This group acts as an early testing ground, helping you identify any unexpected issues or bugs. Because the canary runs in production, it also reveals problems caused by real-world conditions that test environments may not reproduce.

Canary testing is highly recommended for high-risk changes, such as introducing a new payment system, updating a database, or applying performance optimizations. It allows you to monitor and gather real-time feedback in a controlled way, so issues can be fixed promptly without disrupting the experience of every user.

Additionally, you should perform canary testing when you want to monitor how users interact with a new feature or change. This can offer valuable insights into user behavior and help you fine-tune the update if necessary. Overall, performing canary testing at the right time minimizes risks and ensures a smoother release process.

How Does Canary Testing Work?

Canary testing works by releasing a new feature or update to a small subset of real users before rolling it out to everyone. This small group is called the “canary group.” The idea is similar to how miners used canaries to detect harmful gases; it’s a way to carefully test in a controlled environment before full exposure.

First, select a representative group of users who will receive the new feature. This is often done by targeting specific regions, platforms, or a percentage of traffic. The selection process should ensure this group reflects the larger audience to capture meaningful results.

Next, the system monitors how the canary group interacts with the new feature. This involves tracking metrics like performance, error rates, user engagement, and system stability. If problems occur, they are detected early and only impact this small group instead of the entire user base.
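To make the monitoring step concrete, here is a minimal Python sketch that summarizes request records per group. It assumes each request is logged with a group label (`baseline` or `canary`), an HTTP status code, and a latency; the `Request` type, the rule that any status of 500 or above counts as an error, and the sample records are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import Dict, List


@dataclass
class Request:
    group: str         # "baseline" or "canary" (illustrative labels)
    status: int        # HTTP status code
    latency_ms: float


def summarize(requests: List[Request], group: str) -> Dict[str, float]:
    """Request count, error rate (status >= 500), and p95 latency for one group."""
    rows = [r for r in requests if r.group == group]
    if not rows:
        raise ValueError(f"no requests recorded for group {group!r}")
    errors = sum(1 for r in rows if r.status >= 500)
    latencies = [r.latency_ms for r in rows]
    # quantiles(n=100) returns the 1st..99th percentiles, so index 94 is the 95th.
    # method="inclusive" keeps the result within the observed range for small samples.
    if len(latencies) > 1:
        p95 = quantiles(latencies, n=100, method="inclusive")[94]
    else:
        p95 = latencies[0]
    return {"requests": len(rows), "error_rate": errors / len(rows), "p95_ms": p95}


if __name__ == "__main__":
    log = [
        Request("baseline", 200, 120.0), Request("baseline", 200, 95.0),
        Request("baseline", 500, 300.0), Request("baseline", 200, 110.0),
        Request("canary", 200, 130.0), Request("canary", 503, 450.0),
    ]
    for g in ("baseline", "canary"):
        print(g, summarize(log, g))
```

Summarizing both groups the same way is what makes the later comparison between canary and baseline meaningful.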

During this phase, teams analyze the feedback and logs collected from the canary group. If all looks good and no major issues are found, the feature can then be gradually rolled out to more users. This incremental approach ensures that there’s another opportunity to catch unforeseen problems before a full release.

If issues do arise during canary testing, the update can be rolled back or fixed for only the subset of users. This limits the risks of widespread outages or negative user experiences.

By following these steps, canary testing allows teams to deliver updates confidently while maintaining a high level of system reliability and user satisfaction.

How to Perform Canary Testing?

Canary testing involves releasing a new update or change to a small subset of users before rolling it out to a larger audience. Here are the steps to perform canary testing in detail:

- Define Goals and Metrics: Start by identifying the purpose of the test and the metrics that will measure its success. For example, you might track user engagement, error rates, or system performance. Clear goals ensure you know what to monitor and evaluate during the test.

- Prepare the Environment: Make sure production can run the new version alongside the current one, with the same configuration, data sources, and monitoring for both. This ensures that the results you gather are accurate and reflect what will happen when the change is fully deployed.

- Select the Canary Users: Choose a small, representative group of users for the initial rollout. These users should reflect the diversity of your overall audience to ensure the results are meaningful. It’s important to ensure that these users are informed or are part of a controlled testing group.

- Deploy the Update: Roll out the update to this canary group. Use automated deployment tools if possible to minimize human error and ensure consistency in the rollout process.

- Monitor Performance: Continuously monitor the performance of the update for the selected canary group. Keep an eye on the predefined success metrics, and look for unexpected behaviors, errors, or issues.

- Collect Feedback: Gather user feedback from the canary group to understand how the update impacts their experience. Pair this with the performance data you’ve collected for a complete picture.

- Analyze Results: Compare the results of the canary group with your expectations and baseline metrics from the existing system. Determine if the update performs as intended or if adjustments are needed.

- Decide on the Next Steps: Based on the analysis, decide whether to proceed with a wider rollout, make additional fixes, or roll back the update for the canary group. If the test is successful, you can expand the update to more users in stages.

- Iterate and Optimize: If issues are identified, resolve them and repeat the canary testing process until the update meets your standards. This iterative approach helps you refine the update for maximum reliability and performance.
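The deploy, monitor, analyze, and decide steps above are often wired into a loop that raises traffic in stages and stops at the first failed check. In this hedged Python sketch, `set_traffic` and `is_healthy` are hypothetical callbacks standing in for your traffic-routing tool and your metric checks, and the stage percentages are only an example.

```python
from typing import Callable, Sequence

# Example stage percentages; real schedules vary by team and risk.
STAGES = (1, 5, 25, 50, 100)


def run_rollout(
    set_traffic: Callable[[int], None],
    is_healthy: Callable[[int], bool],
    stages: Sequence[int] = STAGES,
) -> str:
    """Raise canary traffic stage by stage; roll back to 0% on the first failed check."""
    for pct in stages:
        set_traffic(pct)              # hypothetical hook into a traffic router
        if not is_healthy(pct):       # hypothetical check against predefined metrics
            set_traffic(0)            # send everyone back to the stable version
            return f"rolled back at {pct}%"
    return "fully released"


if __name__ == "__main__":
    history = []
    # Fake health check that starts failing once 25% of traffic is on the canary.
    print(run_rollout(history.append, lambda pct: pct < 25), history)
```

In a real pipeline, `is_healthy` would wait for an observation window at each stage before judging, so that every step sees enough traffic to be meaningful.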

By following these steps, you ensure that changes are thoroughly vetted before reaching all users, reducing the likelihood of major problems and building confidence in your deployment process.

Goals of Canary Testing

The main goals of canary testing are:

- Minimize Risk: Canary testing helps reduce the risk of widespread issues by limiting the number of users who receive the update initially. Any problems identified during this phase can be addressed before the update is deployed on a larger scale.

- Detect Issues Early: By deploying changes to a small, controlled group of users, you can spot unexpected bugs, performance issues, or compatibility problems before they affect the majority of users.

- Ensure Stability: The goal is to confirm that the update maintains system stability under real-world conditions, ensuring that key functionalities are not disrupted.

- Gather Real-World Feedback: Canary testing allows you to gather feedback from actual users in a live environment. This helps you understand how the update performs and aligns with user expectations.

- Validate Performance Metrics: Monitor the system for key performance indicators, such as response time and resource usage, to ensure that the update does not negatively impact overall performance.

- Improve Rollout Confidence: Successful canary testing builds confidence in the quality and reliability of the update, making the final rollout to all users smoother and less risky.

- Adapt Quickly: If issues arise, canary testing provides the opportunity to address them and refine the update without impacting the entire user base, helping you remain agile in your deployment process.

Benefits of Canary Testing

The main benefits of canary testing are:

- Early Detection of Issues: Canary testing helps identify bugs or problems in the update early, allowing them to be fixed before the update is fully released to all users.

- Minimized Risk: By testing on a small group of users first, canary testing reduces the risk of a widespread problem affecting the entire user base.

- Improved Stability: The feedback gathered during canary testing ensures the update is more stable and reliable when it is rolled out to everyone.

- Faster Problem Resolution: With detailed data from a smaller user group, developers can quickly investigate and resolve issues, speeding up the refinement process.

- Reduced User Impact: By limiting issues to a smaller group, canary testing ensures most users experience fewer disruptions or failures during updates.

- Real-World Testing Environment: Canary testing provides insights from real-world usage, as users interact with the update in their own environments, revealing issues that may not appear in lab testing.

- Better User Experience: Fixing problems before a full rollout helps maintain user trust and satisfaction, as they experience a polished and well-functioning update.

- Cost Efficiency: Addressing issues in a small test group can save time and resources compared to fixing widespread problems after a full release.

Challenges of Canary Testing

Common challenges when performing canary testing include:

- Limited Test Coverage: Canary testing only involves a small group of users, which may not represent the diversity of the entire user base. This can result in missing some issues that might only affect certain demographics or less common use cases.

- Risk of Overlooking Critical Issues: Some critical bugs or performance issues may go unnoticed because they do not appear in the limited environment of the canary group. These issues could emerge when the update is rolled out to the entire user base.

- User Displeasure in Test Group: If the canary version has significant flaws, users in the test group may experience frustration, leading to dissatisfaction and, in some cases, loss of trust in the product or service.

- Maintenance Complexity: Managing multiple versions of the software during the canary testing phase can be complex and resource-intensive. This may lead to configuration errors or deployment challenges.

- Difficulty in Identifying Root Causes: When the test group encounters issues, it can be challenging to determine whether the problem lies in the software update itself, specific user environments, or network and hardware factors.

- Slower Rollout Process: While canary testing helps ensure quality, it can slow down the overall deployment timeline, especially if multiple iterations of the update are needed to address issues.

- Data Security and Privacy Risks: Sharing updates with a subset of users may inadvertently expose sensitive information or features that are not yet ready for public release, posing risks to security or confidentiality.

These challenges highlight the need for a balanced and well-structured canary testing approach to ensure its benefits outweigh its drawbacks.

How to deploy the Canary Test?

- Define the Objectives: Start by clearly identifying the goals of the canary test. Determine what aspects of the new feature or update you want to evaluate, such as performance, stability, or user experience.

- Prepare the Environment: Set up a separate testing environment where the update or feature can first be deployed safely. This ensures minimal risk to the main system and allows initial adjustments if needed.

- Identify the User Subset: Choose a small, controlled group of users or systems to receive the new update. These users should represent a diverse cross-section of your audience to gather meaningful feedback.

- Release the Update Gradually: Deploy the update to the selected user subset in small increments. Monitor the deployment process closely to ensure there are no technical issues.

- Monitor Key Metrics: Track important performance indicators such as system stability, response times, error rates, and user behavior. Use monitoring tools to gather real-time data to evaluate the impact of the update.

- Collect Feedback: Gather feedback from the subset of users experiencing the update. This may include surveys, app reviews, or direct communication to understand their experience and identify any issues.

- Address Detected Issues: If any critical issues or bugs are identified during monitoring or based on user feedback, take corrective actions immediately. Resolve the problems in the canary release before considering a broader rollout.

- Evaluate Results: Review the results of the canary test by combining system performance data and user feedback. Use this evaluation to decide if the update is ready for the wider audience.

- Scale the Rollout: Once you’re confident in the stability and functionality of the update, proceed to gradually release it to the rest of your users. Continue monitoring metrics during this phase to ensure the broader deployment is successful.

- Document the Process: Keep a detailed log of the canary deployment process, including test results, identified issues, and corrective actions taken. This documentation will be valuable for future deployments and improving the overall testing strategy.
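A structured record makes the documentation step easier to keep consistent across releases. The Python sketch below is one possible shape, assuming one entry per rollout stage; the field names, the decision labels, and the sample values in the usage lines are made up for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class StageRecord:
    percent: int
    error_rate: float
    p95_ms: float
    decision: str              # illustrative labels: "promote", "hold", "rollback"
    note: str = ""
    # UTC timestamp taken when the record is created.
    at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


@dataclass
class CanaryLog:
    release: str
    stages: List[StageRecord] = field(default_factory=list)

    def record(self, **details) -> None:
        self.stages.append(StageRecord(**details))

    def to_json(self) -> str:
        # asdict() also converts the nested StageRecord objects into dictionaries.
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    log = CanaryLog(release="2.4.0")
    log.record(percent=5, error_rate=0.004, p95_ms=180.0, decision="promote")
    log.record(percent=25, error_rate=0.031, p95_ms=420.0,
               decision="rollback", note="timeouts in payment service")
    print(log.to_json())
```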

By following these steps, you can effectively deploy a canary test to minimize risks and ensure a smooth experience for your users.

How to Release a Canary Test?

Releasing a canary test involves careful planning and a step-by-step approach to ensure that the process runs smoothly and minimizes risks to your users. Below is a detailed guide on how to successfully release a canary test:

- Plan the Canary Release: Start by defining the specific goals of the canary test. Decide what features or changes you want to test and establish measurable metrics to evaluate performance. Identify a small segment of users who will participate in the test. This group should represent your broader user base while remaining small enough to limit potential impact.

- Set Up the Canary Environment: Prepare a dedicated deployment environment that mirrors the production setup. Ensure the new changes and features to be tested are implemented in this environment. Run thorough pre-deployment tests to catch any bugs or performance issues before releasing to real users.

- Deploy to the Canary Group: Gradually roll out the changes to the selected canary group. This can involve releasing to a specific percentage of servers or users (e.g., 5%) based on your traffic management strategy. Automation tools like feature flags or traffic-routing systems can help control the rollout precisely.

- Monitor Performance and Feedback: Continuously monitor the performance of the canary release using the metrics and KPIs you defined earlier. Keep an eye on user behavior, system logs, error rates, and any feedback from the canary group. Respond promptly to any issues that arise.

- Analyze Results: Compare the performance and reliability of the canary version against your expected benchmarks. Use the data to determine if the release meets the success criteria. Look for patterns in the results to identify potential improvements before expanding the release.

- Iterate and Fix Issues: If issues are identified during the canary testing phase, revert the deployment for this group to the stable version. Address the problems in the code, retest in the canary environment, and redeploy to the canary group once resolved.

- Scale the Release Gradually: After the canary test is deemed successful, begin scaling the release to a larger portion of your users. Monitor performance as you increase the rollout percentage, ensuring that the changes remain stable and reliable across a wider user base.

- Finalize the Full Release: Once you are confident in the results and the system’s stability, transition from the canary version to a full production release. Inform all stakeholders, including your teams and users, about the successful deployment.
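Traffic-routing systems typically implement the percentage split with weights. This Python sketch uses a hypothetical `CanaryRouter` class and made-up pool names `app-v1` and `app-v2`. It routes each request independently, so unlike a sticky per-user assignment, the same user may see both versions across requests; `set_weight` models scaling the release gradually.

```python
import random
from collections import Counter
from typing import Optional


class CanaryRouter:
    """Hypothetical router that sends a configurable share of requests to the canary pool."""

    def __init__(self, stable: str, canary: str, canary_weight: float,
                 seed: Optional[int] = None) -> None:
        self.stable = stable
        self.canary = canary
        self._rng = random.Random(seed)
        self.set_weight(canary_weight)

    def set_weight(self, canary_weight: float) -> None:
        """Change the canary share, e.g. from 0.05 to 0.25 when scaling the release."""
        if not 0.0 <= canary_weight <= 1.0:
            raise ValueError("canary_weight must be between 0 and 1")
        self.canary_weight = canary_weight

    def route(self) -> str:
        # Each request is decided independently, so routing is not sticky per user.
        return self.canary if self._rng.random() < self.canary_weight else self.stable


if __name__ == "__main__":
    router = CanaryRouter("app-v1", "app-v2", canary_weight=0.05, seed=42)
    print(Counter(router.route() for _ in range(100_000)))
    router.set_weight(0.25)
    print(Counter(router.route() for _ in range(100_000)))
```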

Releasing a canary test requires patience, precision, and responsiveness. By following these steps, you can safely introduce changes, improve your system’s reliability, and provide a better experience for your users.

Canary Testing vs Canary Deployment vs Canary Release

| Aspect | Canary Testing | Canary Deployment | Canary Release |
|---|---|---|---|
| Definition | A testing approach where a small subset of users is exposed to changes to identify issues and gather feedback. | A gradual rollout of new application features or services to a small portion of users for stability checks. | The act of fully releasing the tested and deployed feature to the entire user base. |
| Purpose | To verify the functionality, performance, and stability of changes before wider exposure. | To ensure the new change integrates well and doesn’t impact existing services negatively. | To make the new features or updates available for all users after validation is complete. |
| Scope | Focuses on testing specific features, changes, or updates with minimal user exposure. | Covers the partial implementation of the new feature within a live environment. | Encompasses all users by completing the distribution of changes or updates. |
| Duration | Short-term, aiming to identify issues quickly. | May last longer than testing, as it examines the real-world performance under controlled conditions. | Permanent, marking the official adoption of a new change. |
| Users | Limited to a small, chosen test group or early adopters. | Exposes a portion of the user base to the update, typically selected randomly or strategically. | Includes the entire user base once deemed suitable. |
| Feedback | Collects data from focused groups to evaluate potential bugs or improvements required. | Feedback gathered is monitored for potential failures at small-scale usage. | Feedback can still be received post-release, but changes are already live for everyone. |
| Risk Level | Low, as it is isolated to a small group ensuring quick rollback if issues occur. | Moderate, as it’s implemented in the live environment but with limited exposure. | Higher, as the release impacts the entire user base. |
| Rollback Capability | Simple to revert due to the controlled nature of the test environment. | Easier to control since it’s not fully deployed to all users. | Complex, requiring more effort if issues arise, as the feature is fully released. |

Canary Testing vs. A/B testing

| Aspect | Canary Testing | A/B Testing |
|---|---|---|
| Definition | A deployment strategy where a new feature or version is gradually rolled out to a subset of users. | A testing method where different versions of a feature are shown to users to measure performance. |
| Purpose | To ensure the stability and reliability of a new release before a full rollout to all users. | To compare and analyze user preferences or behaviors to determine which version performs better. |
| User Groups | Typically involves a smaller group of real users acting as the initial recipients of the new changes. | Divides the user base into multiple groups, often equally, to test different feature variations. |
| Scope of Testing | Focuses on system reliability, performance, and issues in the early release process. | Focuses on user experience, engagement, and conversion rates between multiple versions. |
| Risk | Low risk as issues can be identified and mitigated before reaching all users. | Minimal risk since both variations are tested, but user experience can still be impacted during testing. |
| Rollback Options | Rollback is straightforward, as the new version is only tested on a limited audience. | Direct rollback is less likely since it involves comparing versions rather than rolling back a release. |
| Test Metric | System stability and performance are the primary metrics during testing. | Metrics depend on user engagement, session length, clicks, or conversion rates. |
| Typical Use Case | Releasing a large-scale update, such as a new app version or a major backend feature upgrade. | Optimizing a website layout, button design, or content to improve user engagement and satisfaction. |
| Implementation | Requires separate deployment pipelines and monitoring for the subset of users receiving the update. | Requires integration with analytics tools to collect and compare user behavior and performance metrics. |


Canary Testing vs Beta Testing

| Aspect | Canary Testing | Beta Testing |
|---|---|---|
| Definition | A testing strategy where a small subset of users is exposed to a new change or feature before rolling it out to the entire audience. | A testing phase where a nearly complete product is released to a specific group of external users to gather feedback. |
| Purpose | To detect critical issues or bugs in a controlled environment with minimal risk to the broader user base. | To gather valuable user perspectives, feedback, and suggestions to improve the product’s usability and functionality. |
| Audience | Typically involves a small subset of the live user base within the actual production environment. | Performed by selected external users or customers who voluntarily participate in testing the product. |
| Environment | Conducted in a live production environment with all real-world factors affecting the testing scope. | Usually tested in a pre-release or controlled beta environment, separate from full production deployment. |
| Duration | Short-term, typically lasting only until issues are identified and resolved, or until the feature proves stable. | Medium- to long-term, lasting weeks or months to gather comprehensive feedback from testers. |
| Feedback Type | Provides quantitative data from system performance, error logs, and user interactions during the limited rollout. | Contributes qualitative feedback, including user opinions, feature suggestions, and bug reports. |
| Primary Focus | Focused on technical stability and performance in real user scenarios. | Focused on overall user experience, functionality, and alignment with user needs. |
| Risk Level | Low-risk approach as only a small number of users are exposed to potential issues. | Moderate risk since there could be unknown bugs or flaws, but the environment is typically limited to beta users. |
| Scalability | Can easily scale up to include more users incrementally after verifying the success of earlier testing cohorts. | Not scalable; the number of beta participants is fixed for the duration of testing. |
| Examples | Rolling out a new feature like a payment system to 5% of users to ensure its reliability before launching it for everyone. | Releasing a beta version of a new gaming app to a group of early adopters for feedback on gameplay and features. |
| When to Use | Ideal for verifying small but critical feature updates or system changes in a live environment with minimal impact on the majority of users. | Best for validating major product releases or large updates requiring extensive consumer feedback before a full-scale launch. |


Canary Testing vs. Blue-Green Deployment

| Aspect | Canary Testing | Blue-Green Deployment |
|---|---|---|
| Definition | A strategy where new changes are gradually rolled out to a subset of users while monitoring for issues. | A method that involves maintaining two environments (Blue & Green) to switch traffic between them seamlessly. |
| Purpose | To test new features or updates on a small scale before full-scale deployment. | To reduce downtime and risks during deployment by allowing easy rollback to the previous stable version. |
| Implementation | A small percentage of live users are exposed to the new version while the majority continue using the old version. | Two identical environments are used, where the new version is deployed in one (Green) while the old is live (Blue). Traffic is switched to Green once validated. |
| Risk Mitigation | Identifies potential issues on a limited user base without impacting all users. | Allows safe testing and rollback without affecting live traffic or compromising user experience. |
| Rollback Process | Requires disabling the new version for affected users and restoring the previous state for the subset. | Simply switch traffic back to the stable environment (Blue) with immediate effect and minimal disruption. |
| Use Case | Ideal for testing small, incremental updates or features in a live production environment. | Best for major updates or complete overhauls where seamless transitioning with zero downtime is critical. |
| Complexity | Requires configuration for gradual rollout and monitoring of a specific user subset. | Demands maintaining and managing two separate environments simultaneously, which may require more resources. |
| Monitoring and Feedback | Relies heavily on metrics and user feedback from the subset for identifying potential flaws. | Primarily involves monitoring the Green environment and ensuring stability before full traffic switch. |
| Cost | Generally cost-effective as it uses the existing infrastructure for the rollout. | Higher cost due to duplication of infrastructure and maintaining two active environments. |
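The difference in the rollback rows is easiest to see in code. In this hypothetical Python sketch, a blue-green setup routes all traffic to exactly one environment, so releasing and rolling back are both a single pointer switch, whereas a canary splits traffic between versions by percentage. The class name and version labels are illustrative only.

```python
from typing import Dict, Optional


class BlueGreen:
    """Hypothetical blue-green switch: all traffic goes to exactly one environment."""

    def __init__(self, live_version: str) -> None:
        self.versions: Dict[str, Optional[str]] = {"blue": live_version, "green": None}
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version: str) -> None:
        # The new version is installed where no users are currently routed.
        self.versions[self.idle] = version

    def switch(self) -> None:
        # Releasing and rolling back are the same operation: flip the live pointer.
        if self.versions[self.idle] is None:
            raise RuntimeError("nothing is deployed to the idle environment")
        self.live = self.idle

    def route(self) -> Optional[str]:
        return self.versions[self.live]


if __name__ == "__main__":
    env = BlueGreen("v1")
    env.deploy_to_idle("v2")
    env.switch()
    print(env.route())   # every request now goes to v2
    env.switch()
    print(env.route())   # rollback: every request goes back to v1
```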

Frequently Asked Questions

Why is it called Canary Testing?

The term “canary testing” comes from an old practice where miners would take canaries into coal mines to detect harmful gases like carbon monoxide. If the canary showed signs of distress, it was a warning for the miners to evacuate. Similarly, in software testing, a canary test deploys a small change to a limited audience first. If issues are detected, the change can be rolled back before it affects all users.

Is Canary Testing better than Blue-Green testing?

Both canary testing and blue-green testing are useful methods, but they serve different purposes. Canary testing is better when you want to release changes slowly to a small group of users to catch issues early. Blue-green testing is useful for quickly switching between old and new versions, minimizing downtime. The best choice depends on the situation and the goals of the deployment. Sometimes, using both methods together can provide the most effective results.

How do we determine when Canary Testing makes sense?

To determine if canary testing makes sense, consider the impact of the changes you are testing. It is ideal for situations where you want to try out new features or updates on a small group of users to identify issues early without affecting everyone. Canary testing works best when you can monitor user behavior, system performance, and error rates effectively during the test. If you can route a small share of traffic to the new version and roll it back quickly, canary testing is a good choice.

What are the benefits of Canary Release?

Canary release helps catch problems early by testing changes on a small group of users first. It reduces the risk of widespread issues by gradually rolling out updates. This approach ensures better stability and gives real user feedback, helping teams improve the feature or fix issues before a full release. It is also easier to roll back changes if something goes wrong. Overall, canary release makes deployments safer and more reliable.

Can Canary Testing be automated?

Yes, canary testing can be automated. Automation tools and scripts can help monitor the performance and behavior of the new changes in real-time. These tools can detect errors, track metrics, and compare results with expected outcomes. Automation makes the process faster, helps catch issues quickly, and reduces manual effort, making canary testing more efficient and reliable.
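Automated canary checks usually come down to comparing canary metrics against the baseline using agreed thresholds. The Python sketch below assumes both groups are summarized as dictionaries with `requests`, `error_rate`, and `p95_ms` keys; the `judge` function and the threshold values are hypothetical placeholders to tune for your own service, not recommended defaults.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Thresholds:
    # Placeholder values; tune them for your own service.
    max_error_rate_increase: float = 0.005  # absolute, i.e. +0.5 percentage points
    max_latency_ratio: float = 1.2          # canary p95 may be at most 20% slower
    min_requests: int = 500                 # too little traffic means no verdict


def judge(baseline: Dict[str, float], canary: Dict[str, float],
          t: Thresholds = Thresholds()) -> str:
    """Return PASS, FAIL, or INCONCLUSIVE for one observation window."""
    if canary["requests"] < t.min_requests:
        return "INCONCLUSIVE"
    if canary["error_rate"] - baseline["error_rate"] > t.max_error_rate_increase:
        return "FAIL"
    if canary["p95_ms"] > baseline["p95_ms"] * t.max_latency_ratio:
        return "FAIL"
    return "PASS"


if __name__ == "__main__":
    baseline = {"requests": 20_000, "error_rate": 0.002, "p95_ms": 200.0}
    print(judge(baseline, {"requests": 1_000, "error_rate": 0.003, "p95_ms": 210.0}))  # PASS
    print(judge(baseline, {"requests": 1_000, "error_rate": 0.020, "p95_ms": 210.0}))  # FAIL
```

Returning INCONCLUSIVE instead of PASS when traffic is low keeps the automation from promoting a release on too little evidence.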

How does Canary Testing fit into a DevOps environment?

Canary testing fits perfectly into a DevOps environment because it supports the principles of continuous delivery and deployment. By rolling out changes to a small group of users first, teams can monitor performance and quickly identify issues without affecting the entire system. This approach aligns with the DevOps goal of delivering high-quality updates quickly and reliably, while reducing risks during deployment.

What is a Canary Release?

A canary release is a way to roll out new software or updates to a small group of users before releasing it to everyone. This method helps identify any problems early, so they can be fixed without impacting all users. It is like testing the waters with a small group to ensure everything works as expected before a full launch.

What is a Canary Analysis?

A canary analysis is the process of evaluating the performance, reliability, and behavior of a new software version during a canary release. It involves comparing the data from the small group of users testing the new version with the existing version’s data. This helps detect any issues or risks early, ensuring the update is safe to roll out to all users.
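One simple way to check whether a canary’s error rate is genuinely worse than the baseline’s, rather than worse by chance, is a one-sided two-proportion z-test. This Python sketch covers a single metric only and relies on the normal approximation, which needs reasonably large request counts; the function names are illustrative and the counts in the usage lines are made up. A small p-value suggests the canary really is producing more errors.

```python
import math


def two_proportion_z(err_base: int, n_base: int, err_canary: int, n_canary: int) -> float:
    """z statistic for the difference in error rates, using the pooled rate under H0."""
    p_base = err_base / n_base
    p_canary = err_canary / n_canary
    pooled = (err_base + err_canary) / (n_base + n_canary)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_base + 1 / n_canary))
    if se == 0:  # no errors (or only errors) in both groups: no evidence of a difference
        return 0.0
    return (p_canary - p_base) / se


def one_sided_p_value(z: float) -> float:
    """P(Z >= z) for a standard normal Z, i.e. evidence that the canary is worse."""
    return 0.5 * math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    z = two_proportion_z(err_base=40, n_base=20_000, err_canary=12, n_canary=1_000)
    print(f"z = {z:.2f}, p = {one_sided_p_value(z):.2g}")
```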

Is Canary Testing suitable for all types of companies?

Canary testing is not suitable for all types of companies. It works best for companies with large user bases and systems that can support gradual rollouts. Smaller companies or those with limited resources may find it harder to implement because it requires infrastructure to monitor and compare live metrics during the test. However, for companies that rely heavily on software reliability, it can be highly beneficial.

Is Canary Testing the same as A/B testing?

No, canary testing is not the same as A/B testing. Canary testing focuses on rolling out a new version of software to a small group of users first to ensure it is stable before releasing it to everyone. A/B testing, on the other hand, compares two versions of a product or feature (version A and version B) to see which performs better. While both involve testing with users, the goals and methods are different.

What’s the difference between a Smoke Test and a Canary Test?

A smoke test is a quick and basic check to verify that the most important features of a software application work properly after a new build. It ensures the build is stable enough for further testing. A canary test, on the other hand, gradually rolls out a new version of the software to a small group of users to monitor its performance and stability before a full release. The key difference is that smoke tests are typically performed in a controlled testing environment, while canary tests happen in a live production environment.

What’s the difference between a Blue-Green Test and a Canary Test?

A blue-green test and a canary test are both methods for releasing software updates, but they work differently. A blue-green test involves having two environments, “blue” and “green.” One is live (blue), and the other is ready with the new version (green). You can switch all users to the green environment once it’s verified. A canary test, on the other hand, releases the new version to a small group of users first. If it performs well, it’s gradually rolled out to everyone. The main difference is that blue-green testing switches the entire environment, while canary testing updates only for a small portion of users at first.

Should You Execute Your Automated Tests Before Canary Testing?

Yes, you should execute your automated tests before canary testing. Automated tests help ensure that the new version of your application is stable and free of critical bugs before it reaches any users. By running these tests first, you can catch major issues early and reduce the risk of introducing errors during the canary testing phase. This step saves time and builds confidence in the release process.

Conclusion

Canary testing is a powerful and efficient technique for releasing new features or updates in a controlled manner, minimizing risks and ensuring a better user experience. By gradually rolling out changes to a small subset of users, teams can monitor performance, gather valuable feedback, and detect any potential issues before a full-scale deployment.

While it requires careful planning and monitoring, the approach is generally cost-effective and integrates well into modern CI/CD pipelines. By leveraging metrics, analytics, and user interactions during the testing phase, teams can make informed decisions about whether to proceed, pause, or roll back the deployment.

Ultimately, canary testing reduces downtime, improves system reliability, and fosters user trust, making it an essential strategy for delivering high-quality software updates in today’s fast-paced development landscape.