Performance Testing: What it is, Why it is important, Types of Performance Tests

Performance testing is an essential part of software development that helps ensure applications run smoothly and efficiently under various conditions. By conducting performance tests, developers can identify and address potential issues such as slow response times, bottlenecks, and resource limitations before they affect end-users.

This type of testing not only improves the user experience but also enhances the overall reliability and scalability of a system.

In this article, we will explore what performance testing is, why it is important, when to conduct it, and why it is a crucial step in delivering high-quality software products.

What is Performance Testing?

In software, performance testing (also called Perf Testing) determines or validates the speed, scalability, and/or stability characteristics of the system or application under test. Performance is concerned with achieving response times, throughput, and resource-utilization levels that meet the performance objectives for the project or product.

Web application performance testing is conducted to mitigate the risk of availability, reliability, scalability, responsiveness, stability etc. of a system.

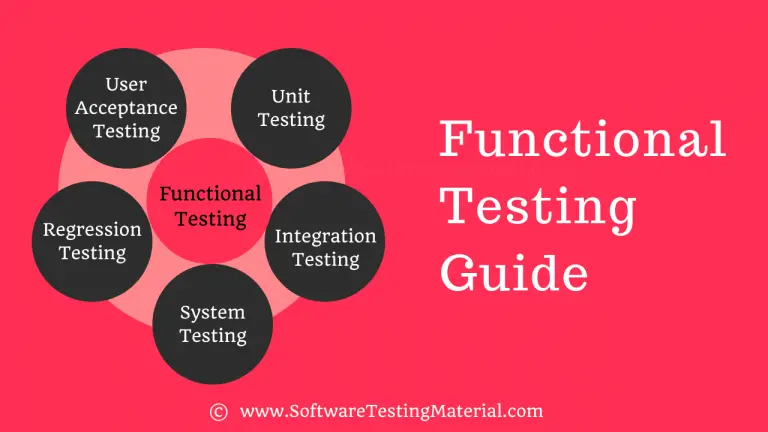

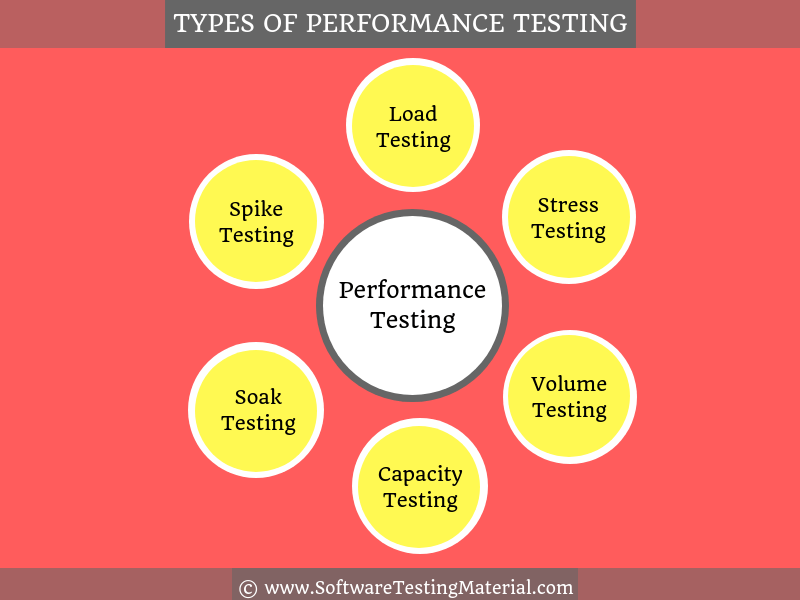

Performance testing is a non-functional software testing technique that encompasses a number of different types of testing, such as load testing, volume testing, stress testing, capacity testing, soak/endurance testing, and spike testing, each of which is designed to uncover or solve performance problems in a system.

Why is System Performance Testing important?

System performance testing is vital to ensure that applications run smoothly and efficiently under various conditions. In today’s market, the performance and responsiveness of applications are crucial to a business’s success.

We use performance testing tools to measure the performance of a system or application under test (AUT), which helps in releasing high-quality software. The goal is to measure and improve performance rather than to find functional defects in the application.

- Ensures Application Reliability: Detects performance bottlenecks and ensures the application can handle expected user loads effectively.

- Prevents Downtime: Identifies and mitigates issues that could cause system failures or slowdowns, reducing the risk of downtime.

- Enhances User Experience: Validates that the application meets predefined performance criteria, ensuring a smooth and responsive user experience.

- Identifies Hidden Issues: Reveals underlying problems such as memory leaks, synchronization issues, and network latency that could degrade performance over time.

- Optimizes Resource Utilization: By identifying inefficient memory usage, excessive CPU consumption, and other resource drains, performance testing allows for the optimization of system resources. This not only improves performance but also reduces operational costs.

- Supports Business Goals: Ensures the application performs reliably, thereby supporting business operations and user satisfaction.

- Improves SEO Ranking: Page speed is one of the signals search engines use when ranking pages, so faster, more reliable websites tend to gain visibility and attract more traffic.

- Saves Time and Resources: Early identification of performance issues prevents costly fixes and allows for proactive measures to be taken, preventing similar issues in the future and ensuring long-term stability and performance.

Overall, performance testing is indispensable for delivering high-quality software that meets the needs and expectations of users while also maintaining the operational efficiency and reliability of the system.

When is the right time to conduct performance testing?

The best time to conduct performance testing of a web or mobile application is as early as possible in the software development lifecycle. Ideally, performance testing should start during the development phase when individual components or modules are being developed. This helps in identifying performance issues before they become too expensive to fix. Additionally, performance testing should be carried out before any major release to ensure the system can handle the expected load without any hiccups. Regular performance testing during maintenance is also important to make sure that updates or changes do not negatively impact the system’s performance.

What does performance testing measure?

Performance testing measures several important aspects of an application’s behavior to ensure it can perform well under different conditions. Here are some key factors:

- Load Time: The time it takes for the application to load and become fully functional from the moment a user tries to access it.

- Response Time: How quickly the application responds to a user request. This includes the time taken to process a request and return a response.

- Scalability: How well the application can handle increased load or a growing number of users. This helps determine if the application will continue to perform well as usage scales up.

- Bottlenecks: Points in the application where performance is hindered, slowing down the entire system. Identifying bottlenecks is crucial for improving efficiency and overall performance.

- Concurrent Users: How many users the application can handle at one time.
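Response time, throughput, and concurrent users are linked, and a quick way to sanity-check a target is Little's Law: the number of users in the system equals throughput multiplied by the time each user spends per request cycle. The sketch below assumes a closed workload in which each user waits for a response and then pauses for a "think time"; the function names and figures are illustrative only.

```python
def concurrent_users(throughput_rps: float, response_time_s: float,
                     think_time_s: float = 0.0) -> float:
    """Little's Law: users = throughput x (response time + think time)."""
    return throughput_rps * (response_time_s + think_time_s)


def required_throughput(users: int, response_time_s: float,
                        think_time_s: float = 0.0) -> float:
    """Rearranged form: requests per second needed to serve a given user count."""
    return users / (response_time_s + think_time_s)


# Example: 50 requests/s, 0.5 s response time, 1.5 s think time between requests
print(concurrent_users(50, 0.5, 1.5))       # 100.0
print(required_throughput(100, 0.5, 1.5))   # 50.0
```

If a test reports numbers that violate this relationship by a wide margin, the load generator rather than the application may be the bottleneck.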

Types of Performance Testing?

Performance testing encompasses various methodologies designed to evaluate the robustness and efficiency of an application. By examining different aspects of system behaviour under load, these tests help ensure that applications can meet user expectations and business requirements. Here are some key types of performance testing:

#1. Load Testing

Load Testing is to verify that a system or application can handle the expected number of transactions and to verify its behavior under both normal and peak load conditions (no. of users). To learn more you can check our detailed Load Testing tutorial.

#2. Stress Testing

Stress Testing is to verify the behavior of the system once the load increases beyond its design expectations. This testing addresses which components fail first when we stress the system by applying load beyond the design expectations. It enables us to design a more robust system. To learn more you can check our detailed Stress Testing tutorial.

#3. Spike Testing

Spike Testing is to determine the behavior of the system under a sudden increase of load (a large number of users) on the system. It shows if the system can handle sudden surges in traffic. To learn more you can check our detailed Spike Testing tutorial.

#4. Soak Testing

Soak Testing, also known as Endurance Testing, involves running a system under heavy load for an extended period to identify performance issues. This process ensures that the software can sustain the expected load over a long duration. To learn more you can check our detailed Soak Testing tutorial.
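The load, stress, spike, and soak tests described in this section differ mainly in the shape of the load applied over time. The following is a minimal sketch of that idea; the function name `load_profile`, the ramp rates, and the spike window are assumptions chosen for illustration, not values prescribed by any tool.

```python
def load_profile(kind: str, minute: int, expected_peak: int = 100) -> int:
    """Return the target number of virtual users at a given minute of the test."""
    if kind == "load":
        # Ramp up over 10 minutes, then hold at the expected peak.
        return min(expected_peak, expected_peak * minute // 10)
    if kind == "stress":
        # Keep ramping at the same rate, past the design expectation.
        return expected_peak * minute // 10
    if kind == "spike":
        # Low baseline with a sudden burst to 3x the peak for two minutes.
        return 3 * expected_peak if 10 <= minute < 12 else expected_peak // 5
    if kind == "soak":
        # Hold a steady load for the entire (long) run.
        return expected_peak
    raise ValueError(f"unknown profile: {kind}")


for kind in ("load", "stress", "spike", "soak"):
    users = [load_profile(kind, m) for m in (0, 5, 10, 15, 20)]
    print(f"{kind:>6}: {users}")
```

A real load tool would feed a schedule like this into its virtual-user controller; here it only produces the numbers.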

#5. Endurance Testing

Endurance testing, also known as soak testing, checks how the system performs over an extended period. It helps detect issues like memory leaks that can affect the system’s long-term stability. To learn more you can check our detailed Endurance Testing tutorial.
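One simple way to spot a possible memory leak during an endurance run is to sample memory usage at regular intervals and check whether it trends upward. The sketch below fits a least-squares line to the samples; the 0.5 MB-per-sample threshold and the function names are assumptions for illustration, and a real analysis would also account for warm-up and garbage collection cycles.

```python
def slope_per_sample(values: list[float]) -> float:
    """Least-squares slope of values against their sample index (needs >= 2 values)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def looks_like_leak(memory_mb: list[float],
                    max_growth_mb_per_sample: float = 0.5) -> bool:
    """Flag a run whose memory grows faster than the allowed rate."""
    return slope_per_sample(memory_mb) > max_growth_mb_per_sample


steady = [100, 101, 100, 101]    # fluctuates but does not grow
growing = [100, 102, 104, 106]   # grows by 2 MB every sample
print(looks_like_leak(steady), looks_like_leak(growing))
```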

#6. Scalability Testing

Scalability testing is aimed at assessing an application’s ability to scale up or down in response to varying workloads. This process involves systematically increasing the user count, data volume, or transaction loads to determine how well the application can handle growth. The goal is to identify the maximum capacity of the system and pinpoint performance degradation points. By simulating extended workloads and increasing demands, scalability testing helps ensure that the application can maintain its functionality and performance even as usage scales. This type of testing is crucial for future-proofing the system, validating architectural changes, and planning for anticipated growth. To learn more you can check our detailed Scalability Testing tutorial.

#7. Volume Testing

Volume Testing is to verify whether a system or application can handle a large amount of data. This testing focuses on the database. A performance tester who does volume testing populates the database with a huge volume of data and monitors the behavior of the system. It checks if the system can handle and process the data correctly without issues. To learn more you can check our detailed Volume Testing tutorial.

#8. Capacity Testing

Capacity Testing is to determine how many users a system or application can handle successfully before its performance falls below the agreed goals. This allows us to avoid potential problems in the future, such as those caused by an increased user base or increased volume of data. It helps teams identify a scaling strategy and determine whether a system should scale up or scale out. It is done mainly for high-traffic sites such as eCommerce and banking sites. This testing is sometimes called Scalability testing. To learn more you can check our detailed Capacity testing tutorial.
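A capacity test can be thought of as a stepped search: increase the user count until the performance goal is no longer met, and report the last level that passed. The sketch below assumes a hypothetical `simulated_p95_ms` function standing in for an actual test run at each load level; in practice each step would be a real measurement.

```python
from typing import Callable


def find_capacity(measure_p95_ms: Callable[[int], float], goal_ms: float,
                  start: int = 50, step: int = 50, max_users: int = 5000) -> int:
    """Return the highest tested user count whose p95 meets the goal (0 if none)."""
    capacity = 0
    users = start
    while users <= max_users:
        if measure_p95_ms(users) > goal_ms:
            break  # goal breached: the previous level is the capacity
        capacity = users
        users += step
    return capacity


def simulated_p95_ms(users: int) -> float:
    # Hypothetical model: flat near 200 ms, degrading sharply past 400 users.
    return 200.0 + max(0, users - 400) ** 2 / 50.0


print(find_capacity(simulated_p95_ms, goal_ms=500.0))
```

Smaller steps give a more precise answer at the cost of more test runs.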

Read more: 100+ Types of Software Testing

Difference between Functional Testing and Non-functional Testing?

| Functional Testing | Non-functional Testing |
|---|---|
| Tests what the system actually does | Tests how well the system performs |
| To ensure that your product meets customer and business requirements and doesn’t have any major bugs | To ensure that the product stands up to customer expectations |
| To verify the accuracy of the software against expected output | To verify the behavior of the software at various load conditions |
| It is performed before non-functional testing | It is performed after functional testing |
| Example of a functional test case is to verify the login functionality | Example of a non-functional test case is to check whether the homepage loads in less than 2 seconds |
| Testing types include unit testing, smoke testing, user acceptance testing, integration testing, regression testing, localization, globalization, and interoperability testing | Testing types include performance testing, volume testing, scalability testing, usability testing, load testing, stress testing, compliance testing, portability testing, and disaster recovery testing |
| It can be performed either manually or in an automated way | It can be performed efficiently if automated |
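To make the contrast concrete, here is a minimal sketch of one functional and one non-functional check against the same page. The `load_homepage` function is a hypothetical stand-in for a real HTTP request, and the 2-second limit mirrors the example in the table.

```python
import time


def load_homepage() -> str:
    # Hypothetical stand-in for fetching the homepage over HTTP.
    time.sleep(0.05)
    return "<html>ok</html>"


def timed(func):
    """Run func and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func()
    return result, time.perf_counter() - start


def test_homepage_returns_content():
    # Functional: checks WHAT the system does.
    body, _ = timed(load_homepage)
    assert "ok" in body


def test_homepage_loads_within_2_seconds():
    # Non-functional: checks HOW WELL the system does it.
    _, elapsed = timed(load_homepage)
    assert elapsed < 2.0


test_homepage_returns_content()
test_homepage_loads_within_2_seconds()
```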

Difference between Performance Testing, Load Testing & Stress Testing

Performance Testing

In software, performance testing (also called Perf Testing) determines or validates the speed, scalability, and/or stability characteristics of the system or application under test. Performance is concerned with achieving response times, throughput, and resource-utilization levels that meet the performance objectives for the project or product.

Performance testing is conducted to mitigate the risk of availability, reliability, scalability, responsiveness, stability etc. of a system.

Performance testing encompasses a number of different types of testing like load testing, volume testing, stress testing, capacity testing, soak/endurance testing and spike testing each of which is designed to uncover or solve performance problems in a system.

Load Testing

Load Testing is to verify that a system/application can handle the expected number of transactions and to verify the system/application behavior under both normal and peak load conditions (no. of users).

Stress Testing

Stress Testing is to verify the behavior of the system once the load increases beyond the system’s design expectations. This testing addresses which components fail first when we stress the system by applying load beyond the design expectations, so that we can design a more robust system.

Performance Testing vs. Load Testing vs. Stress Testing

| Performance Testing | Load Testing | Stress Testing |
|---|---|---|
| It is a superset of load and stress testing | It is a subset of performance testing | It is a subset of performance testing |
| Goal of performance testing is to set the benchmark and standards for the application | Goal of load testing is to identify the upper limit of the system, set the SLA of the app, and check how the system handles heavy load | Goal of stress testing is to find how the system behaves under extreme loads and how it recovers from failure |
| Load limit is both below and above the threshold of a break | Load limit is a threshold of a break | Load limit is above the threshold of a break |
| The attributes checked in performance testing are speed, response time, resource usage, stability, reliability, and throughput | The attributes checked in load testing are peak performance, server throughput, response time under various load levels, load balancing requirements, etc. | The attributes checked in stress testing are stability, response time, bandwidth capacity, etc. |

Difference between Performance Engineering & Performance Testing?

Performance engineering is a discipline that includes best practices and activities during every phase of the software development life cycle (SDLC) in order to test and tune the application with the intent of realizing the required performance.

Performance testing simulates realistic end-user load to determine the speed, responsiveness, and stability of the system. It is concerned with testing and reporting the current performance of an application under various parameters such as response time, concurrent user load, and server throughput.

Performance Testing Process

Identify the test environment:

Identify the physical test environment and the production environment, and know what testing tools are available. Before beginning the testing process, understand the details of the hardware, software, and network configurations. This process must be revisited periodically throughout the project’s life cycle.

Identify performance acceptance criteria:

This includes goals and constraints for response time, throughput and resource utilization. Response time is a user concern, throughput is a business concern, and resource utilization is a system concern. It is also necessary to identify project success criteria that may not be captured by those goals and constraints.

Plan & Design performance tests:

Identify the key scenarios to test, covering the most important use cases. Determine the variability among representative users and how to simulate that variability, define test data, and establish the metrics to be gathered.

Configure the Test Environment:

Prepare the test environment, and arrange tools and other resources before execution.

Implement the Test Design:

Develop the performance tests in accordance with the test design.

Execute the Test:

Execute and monitor the tests.

Analyze Results, Report, and Retest:

Consolidate, analyze and share test results. Fine tune and retest to see if there is an improvement in performance. When all of the metric values are within acceptable limits then you have finished testing that particular scenario on that particular configuration.
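The final step, checking that all metric values are within acceptable limits, can be sketched as a comparison between measured values and the acceptance criteria defined earlier in the process. The class name, field names, and thresholds below are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceCriteria:
    max_p95_response_ms: float   # user concern
    min_throughput_rps: float    # business concern
    max_cpu_percent: float       # system concern


def evaluate(measured: dict, criteria: AcceptanceCriteria) -> list[str]:
    """Return a list of human-readable failures; empty means the scenario passed."""
    failures = []
    if measured["p95_response_ms"] > criteria.max_p95_response_ms:
        failures.append("response time above limit")
    if measured["throughput_rps"] < criteria.min_throughput_rps:
        failures.append("throughput below target")
    if measured["cpu_percent"] > criteria.max_cpu_percent:
        failures.append("CPU utilization above limit")
    return failures


criteria = AcceptanceCriteria(max_p95_response_ms=800, min_throughput_rps=100,
                              max_cpu_percent=75)
run = {"p95_response_ms": 950, "throughput_rps": 120, "cpu_percent": 60}
print(evaluate(run, criteria) or "scenario passed")
```

When the list is empty, testing of that scenario on that configuration is complete; otherwise tune and retest.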

Example of Performance Test Cases

Writing test cases for performance testing requires a different mindset compared to writing functional test cases.

Read more: How To Write Functional Test Cases.

- To verify whether an application is capable of handling a certain number of simultaneous users

- To verify whether the response time of an application under load is within an acceptable range when the network connectivity is slow

- To verify the response time of an application under low, normal, moderate and heavy load conditions

- To check whether the server remains functional without crashing under high load

- To verify whether an application reverts to normal behavior after a peak load

- To verify the CPU and memory usage of the application and database servers under peak load
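The first test case in the list, verifying that an application can handle a certain number of simultaneous users, might be sketched as follows. This is a simplified illustration using threads: `handle_request` is a hypothetical stand-in for a real request to the application, and the user count and time limit are assumed values.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> float:
    """Hypothetical stand-in for one user's request; returns its duration in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated server work
    return time.perf_counter() - start


def run_concurrent_users(n_users: int) -> list[float]:
    """Issue one request per simulated user, all at the same time."""
    with ThreadPoolExecutor(max_workers=n_users) as pool:
        return list(pool.map(handle_request, range(n_users)))


durations = run_concurrent_users(20)
assert len(durations) == 20   # every user got a response
assert max(durations) < 1.0   # slowest response within the acceptable range
print(f"slowest response: {max(durations):.3f}s")
```

Dedicated load testing tools do the same thing at far larger scale, with ramp-up control, distributed load generators, and reporting.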

Critical KPIs in Performance Tests

When conducting performance tests, several key performance indicators (KPIs) help gauge the health and efficiency of an application. These KPIs provide valuable insights into how well the application performs under various conditions. Below are some of the critical KPIs:

- Response Time: This measures the time taken by the application to respond to a user request. A lower response time usually indicates better performance.

- Throughput: This refers to the number of requests the application can handle in a given period. Higher throughput indicates the application can handle more load effectively.

- Latency: This is the delay before the transfer of data begins following an instruction for its transfer. Low latency is crucial for a smooth user experience.

- Error Rate: This KPI measures the percentage of requests that result in errors. A lower error rate signifies a more reliable application.

- Resource Utilization: This includes monitoring CPU, memory, and disk usage. Efficient resource utilization is critical for maintaining performance and avoiding system crashes.

- Concurrent Users: This metric measures the number of users simultaneously interacting with the application without performance degradation.

- Peak Load: This KPI tests the application’s performance under a maximum expected load. It helps in understanding the limits and breaking points of the application.

- Scalability: This measures the application’s ability to handle increased load by adding resources such as servers or memory.

- Apdex Score: The Application Performance Index (Apdex) score reflects user satisfaction based on response times. A higher Apdex score indicates better user satisfaction.

- Uptime: This KPI measures the period the application remains operational and available to users. High uptime is vital for user trust and application reliability.

- Request Rate: This refers to the number of requests sent to the application per second. Monitoring the request rate helps in understanding the traffic load and ensuring the application can handle the demand.

- Session Duration: This measures the amount of time a user spends in a single session on the application. Longer session durations often indicate higher user engagement and satisfaction.

Monitoring these KPIs will help identify performance issues and areas for improvement, ensuring the application delivers a smooth and efficient experience for end-users.
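Several of these KPIs can be derived from the raw list of request results that a load test produces. The sketch below computes percentile response times, throughput, error rate, and the Apdex score from hypothetical sample data. Apdex counts a request as satisfied when it completes within a threshold T, as tolerating when it completes within 4T, and scores (satisfied + tolerating / 2) / total; the 0.5-second threshold here is an assumed value.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def apdex(response_times_s: list[float], threshold_s: float) -> float:
    satisfied = sum(1 for t in response_times_s if t <= threshold_s)
    tolerating = sum(1 for t in response_times_s
                     if threshold_s < t <= 4 * threshold_s)
    return (satisfied + tolerating / 2) / len(response_times_s)


def summarize(samples: list[tuple[float, bool]], duration_s: float,
              apdex_threshold_s: float = 0.5) -> dict:
    """samples holds (response_time_s, succeeded) pairs collected over duration_s."""
    times = [t for t, _ in samples]
    errors = sum(1 for _, ok in samples if not ok)
    return {
        "p50_s": percentile(times, 50),
        "p95_s": percentile(times, 95),
        "throughput_rps": len(samples) / duration_s,
        "error_rate_pct": 100 * errors / len(samples),
        "apdex": apdex(times, apdex_threshold_s),
    }


samples = [(0.2, True), (0.4, True), (0.6, True), (1.0, True), (3.0, False)]
print(summarize(samples, duration_s=10))
```

Percentiles are generally more informative than averages because a few very slow requests can hide behind a healthy mean.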

Characteristics of Effective Performance Testing

Effective performance testing has several important characteristics:

- Realistic Scenarios: The tests should mimic real-world usage as closely as possible. This means using actual user scenarios and data to understand how the application performs in everyday situations.

- Consistency: Tests should be run consistently, using the same conditions every time, to ensure that results are reliable and comparable.

- Comprehensive Coverage: All parts of the application should be tested. This includes testing different features, functions, and interactions to identify any potential issues.

- Clear Metrics: There should be clear definitions of what is being measured, such as response times, load times, or error rates. This makes it easier to understand the results.

- Scalability Testing: It should test how well the application handles an increasing number of users. This helps ensure the application can grow with demand.

- Automated Testing: Where possible, automated tools should be used to run tests. This allows for more frequent testing and quick identification of issues.

- Actionable Results: The results should be easy to understand and provide clear guidance on what needs to be improved. This helps in making effective changes to enhance performance.

Why Performance Testing for UI is Critical in Modern Apps

Performance testing for the user interface (UI) is critical in modern applications due to several key factors.

Firstly, the UI is the primary point of interaction between the user and the application, and any lag or delay can significantly impact the user experience. In today’s fast-paced digital environment, users expect responsive and smooth functionality; any deviation can lead to frustration and reduced user satisfaction.

Secondly, a well-optimized UI ensures that the application performs efficiently across various devices and screen sizes, which is crucial given the diversity of user environments. This is especially important for web and mobile apps, where performance can vary widely depending on the hardware and network conditions.

Lastly, UI performance testing helps identify and mitigate potential bottlenecks early in the development process, reducing the risk of deployment delays and costly post-release fixes. By ensuring that the UI remains fast and responsive under different loads and conditions, developers can deliver a superior, consistent user experience, enhancing user retention and engagement.

What is a Performance Test Document?

A performance test document is a detailed plan or record that outlines the procedures, tools, and metrics used to test the performance of an application or system. Its primary purpose is to ensure that the application performs efficiently under various conditions and meets the required performance standards. This document serves as a guide for testers and developers, helping them to systematically identify, analyze, and resolve performance issues.

How to Write a Performance Test Document

- Objective Definition: Start by clearly defining the objectives of the performance test. This includes specifying what aspects of performance you are testing, such as response time, throughput, or resource utilization.

- Scope and Requirements: Outline the scope of the performance test, detailing the features and functionalities to be tested. Include any specific requirements or constraints.

- Test Environment: Describe the test environment, including hardware, software, network configurations, and any other relevant setup details. Ensure that this environment closely mimics the production environment to get accurate results.

- Test Data: Prepare the test data that will be used during the performance test. This data should be representative of real-world scenarios to produce meaningful and reliable outcomes.

- Test Scenarios and Cases: Develop detailed test scenarios and cases that cover various aspects of the application’s performance. Include both normal and peak load conditions to assess how the application performs under different stresses.

- Metrics and Benchmarks: Define the performance metrics and benchmarks you will use to evaluate the application’s performance. Common metrics include response time, throughput, error rates, and resource utilization.

- Tools and Techniques: List the tools and techniques you will use to conduct the performance tests. This could include specific performance testing software, monitoring tools, and any custom scripts or utilities.

- Execution Plan: Provide a step-by-step plan for executing the performance tests. Include timelines, responsible team members, and any dependencies.

- Results Analysis: Detail how you will analyze the test results. This includes the methods for identifying bottlenecks, comparing results against benchmarks, and interpreting data to draw conclusions.

- Reporting: Describe the format and content of the performance test report. This should include an executive summary, detailed findings, recommendations for improvements, and any supporting data or charts.

- Review and Sign-Off: Include a section for reviewing the performance test document and obtaining sign-off from relevant stakeholders, ensuring that all parties agree on the test plan and objectives.

By following these steps, you can create a comprehensive performance test document that will help ensure your application meets its performance targets and provides a smooth user experience.

Effective Performance Testing Strategy

An effective performance testing strategy includes the following components:

- Define Clear Objectives: Establish specific goals for what you want to achieve with performance testing. This includes identifying performance benchmarks and user expectations.

- Create Realistic Test Scenarios: Simulate real-world usage by creating test scenarios that reflect how users interact with the application. This may include peak usage times and various user actions.

- Identify Key Performance Indicators (KPIs): Determine the metrics that will measure the success of your performance tests. Common KPIs include response time, throughput, and error rates.

- Use a Variety of Testing Types: Employ different types of performance testing such as load testing, stress testing, endurance testing, and spike testing to cover all aspects of performance.

- Automate Testing: Utilize automated testing tools to run performance tests efficiently and repeatedly. Automation ensures tests can be executed more frequently without manual effort.

- Monitor System Resources: Keep track of system resource usage like CPU, memory, disk, and network during tests to identify bottlenecks and resource limitations.

- Analyze Test Results: Carefully analyze the data collected during performance tests. Look for patterns, anomalies, and areas where performance can be optimized.

- Optimize and Retest: Make necessary adjustments based on test findings and run tests again to verify improvements. Continuous testing and optimization are key to maintaining performance.

- Incorporate Performance Testing in CI/CD Pipeline: Integrate performance testing into the continuous integration/continuous deployment pipeline to ensure performance checks are part of the development process.

- Reporting: Effective reporting is a crucial aspect of performance testing as it helps communicate findings, insights, and recommendations to both technical and non-technical stakeholders.

By following these steps, you can develop a robust performance testing strategy that helps ensure your application runs efficiently under various conditions.
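For the CI/CD step in particular, a common pattern is a regression gate that compares the current run against a stored baseline and fails the build when a metric worsens beyond a tolerance. The sketch below assumes all metrics are "lower is better" and uses hypothetical metric names and a 10% tolerance.

```python
def find_regressions(baseline: dict, current: dict,
                     tolerance_pct: float = 10.0) -> dict:
    """Return metrics that got worse than baseline by more than tolerance_pct.

    Assumes every metric is 'lower is better' (e.g. response time, error rate).
    """
    regressions = {}
    for name, base_value in baseline.items():
        change_pct = (current[name] - base_value) / base_value * 100
        if change_pct > tolerance_pct:
            regressions[name] = round(change_pct, 1)
    return regressions


def exit_code(regressions: dict) -> int:
    """Non-zero exit code tells the pipeline to fail the build."""
    return 1 if regressions else 0


baseline = {"p95_ms": 200.0, "error_rate_pct": 1.0}
current = {"p95_ms": 250.0, "error_rate_pct": 1.05}
found = find_regressions(baseline, current)
print(found, "-> exit code", exit_code(found))
```

In a pipeline, the script would end with `sys.exit(exit_code(found))` so that a regression blocks the deployment.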

How to choose the right Performance Testing Tool

There are many tools available in the market for performance testing, making it difficult to pinpoint the best one. Each company has unique needs, so what works perfectly for one may not be suitable for another. Therefore, it’s crucial to conduct thorough analysis before selecting the right tool. Here are some key factors to consider when choosing the best performance testing tool.

- Understand Your Requirements: Identify the specific performance metrics and scenarios you need to test, like load, stress, and scalability.

- Ease of Use: Choose a tool that is user-friendly and has a simple interface, so that team members can quickly learn and use it.

- Scalability: Pick a tool that can simulate the number of users you expect and handle increased testing loads.

- Compatibility: Make sure the tool supports your application’s technology stack, including programming languages and frameworks.

- Hardware and Software Requirements: Assess the hardware and software prerequisites of the tool. Ensure that your existing infrastructure can support the tool without requiring additional investment in new hardware or software upgrades.

- Protocol Support: Verify that the tool supports the protocols and technologies your application uses. Whether it’s HTTP, HTTPS, WebSockets, or custom protocols, the tool should be compatible with your application’s stack to provide accurate testing.

- Reporting Features: Look for tools that provide detailed and customizable reports to help you analyze test results effectively.

- Community and Reviews: Check reviews and find communities online to see other users’ experiences and recommendations.

- Budget: The cost of performance testing tools can vary widely. It’s crucial to identify how much your organization is willing to spend. Some tools offer free versions or trials, while others require substantial investment. Ensuring the tool fits within your budget without compromising on necessary features is paramount.

- Trial or Free Version: If available, use a trial or free version of the tool to test it within your environment before committing to a purchase.

- Types of License: Performance testing tools come with different licensing models, including open source, perpetual licenses, subscription-based, and pay-as-you-go models. Assess your long-term needs to determine which licensing model offers the best value and flexibility.

- Cost Involved in Training: Evaluate the complexity of the tool and the training required for your team to use it effectively. Some tools might require extensive training, increasing their overall cost. Consider tools with comprehensive documentation, tutorials, and user communities that can facilitate easier learning.

- Integration: Choose a tool that integrates well with your existing CI/CD pipelines and other development tools.

- Support and documentation: Reliable support from the tool vendor can be a critical factor, especially when dealing with complex issues. Look for tools that offer robust technical support, including documentation, user guides, forums, and direct support channels like chat or phone support.

By considering these factors, you can select a performance testing tool that best fits your needs and helps ensure your application’s reliability and efficiency.
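One way to weigh these factors against each other is a simple weighted scoring matrix: rate each candidate tool on every criterion and multiply by how much that criterion matters to your team. The tool names, criteria, weights, and ratings below are entirely hypothetical.

```python
def weighted_score(ratings: dict, weights: dict) -> float:
    """Weighted average of 1-5 ratings; higher weight means the criterion matters more."""
    total_weight = sum(weights.values())
    return sum(ratings[criterion] * weight
               for criterion, weight in weights.items()) / total_weight


# Hypothetical weights reflecting one team's priorities
weights = {"protocol_support": 3, "ci_cd_integration": 2, "cost": 1}

# Hypothetical 1-5 ratings for two candidate tools
candidates = {
    "Tool A": {"protocol_support": 5, "ci_cd_integration": 3, "cost": 2},
    "Tool B": {"protocol_support": 3, "ci_cd_integration": 4, "cost": 5},
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name], weights),
                reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name], weights):.2f}")
```

The scores are only as good as the ratings behind them, so a trial of the top candidates in your own environment is still worthwhile.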

What are some popular Performance Testing Tools?

There are a lot of performance testing tools in the market, including free website load testing tools, paid tools, and freemium tools. Almost all the commercial performance testing tools have a free trial, so you can work with a tool hands-on before deciding which is the best one for your needs.

Some of the popular performance testing tools are:

- LoadRunner: A widely-used performance testing tool from Micro Focus, LoadRunner is known for its ability to simulate thousands of users concurrently. It provides comprehensive support for complex architectures and technologies, making it a powerful tool for large enterprises.

- Apache JMeter: An open-source performance testing tool, Apache JMeter is popular due to its flexibility and extensibility. It supports various protocols such as HTTP, FTP, JDBC, and more, making it suitable for testing numerous types of applications.

- NeoLoad: Developed by Neotys, NeoLoad is a load testing tool that aims to provide fast and realistic test configuration and execution for web and mobile applications. It focuses on continuous testing and can integrate with CI/CD pipelines.

- StresStimulus: Known for its user-friendly interface, StresStimulus helps identify performance issues in web applications. It supports load testing through multiple scenarios and helps detect bottlenecks so they can be fixed.

- LoadUI Pro: Part of the ReadyAPI platform, LoadUI Pro is ideal for API load testing. It enables users to create complex load test scenarios with GUI-based testing and offers deep analytics to identify performance issues.

- WebLOAD: A performance testing tool that excels in testing complex web applications, WebLOAD offers robust support for testing heavy user loads. It provides analytics, real-time performance data, and integrates with continuous integration systems.

- Rational Performance Tester: From IBM, Rational Performance Tester is designed for automated performance testing of web and server-based applications. It provides detailed reports and diagnostics for identifying the causes of performance issues.

- AppLoader: A tool designed for testing applications in real-world scenarios, AppLoader simulates users interacting with applications through their usual interfaces. It offers an end-to-end performance testing solution without requiring any coding skills.

- SmartMeter.io: Based on JMeter, SmartMeter.io adds enhanced test creation, execution, and result analysis capabilities. It focuses on providing easier test configuration and better scalability.

- Silk Performer: A performance and load testing solution from Micro Focus (now part of OpenText), Silk Performer supports a wide range of protocols and environments. It provides extensive analytics and reporting to help diagnose performance issues.

- StormRunner Load: A cloud-based performance testing tool from Micro Focus (now part of OpenText, where the product has since been renamed LoadRunner Cloud), StormRunner Load offers on-demand load testing with real-time analytics. It is suitable for testing web and mobile applications and can scale to simulate a high number of users.

- LoadView: A fully managed, cloud-based load testing tool, LoadView allows users to test websites, web applications, and APIs with real-world protocols and browsers. It provides detailed performance metrics and visualizations to help pinpoint issues.

These tools cater to different requirements and come with unique features that make them suitable for various scenarios and organizational needs.

View our full list of Popular Performance Testing Tools.

Conclusion

Performance testing is a crucial part of the software development lifecycle. This article covered what performance testing entails, why it matters for robust and efficient applications, the main types of performance tests, and the key factors to consider when selecting a performance testing tool. By applying an effective performance testing strategy, organizations can improve their software’s reliability, scalability, and overall user experience. The right tool matters, so take the time to analyze your specific needs before choosing one. Doing so helps in meeting business objectives and maintaining a competitive edge in the market.

Frequently Asked Questions

What is Cloud-based Performance Testing?

Cloud-based performance testing uses cloud infrastructure to generate load and measure how an application performs. Instead of relying on traditional, physical hardware, testers use resources and tools from cloud service providers such as AWS, Azure, or Google Cloud. This type of testing helps determine whether an application can handle varying amounts of user traffic and data processing. It is often faster and more cost-effective because load generators can be provisioned on demand and released when the test ends, and tests can run from multiple geographic locations to simulate real-world conditions.

Does performance testing require coding?

Often, yes, but not always. Test scripts simulate user actions and load on the application, and writing or customizing them usually takes some coding skill. These scripts are what make tests automated and repeatable under various conditions. However, many performance testing tools offer user-friendly interfaces with record-and-playback features, which reduce the need for deep coding knowledge. Basic coding skills help in customizing tests and achieving more accurate results, but some tools make it possible to conduct performance testing with minimal coding.
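To give a sense of how little code a basic test script can involve, here is a minimal sketch in Python. It is not taken from any particular tool. The function `simulated_user_action` is a hypothetical stand-in for one scripted user step, and the sleep inside it is a placeholder for a real call to the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_user_action(user_id: int) -> float:
    """Hypothetical stand-in for one scripted user step (for example, an HTTP call).

    Returns the elapsed time in seconds.
    """
    start = time.perf_counter()
    time.sleep(0.01)  # placeholder for real work against the system under test
    return time.perf_counter() - start


def run_load(virtual_users: int) -> list[float]:
    """Run one action per virtual user in parallel and collect the timings."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        return list(pool.map(simulated_user_action, range(virtual_users)))


if __name__ == "__main__":
    timings = run_load(virtual_users=20)
    print(f"{len(timings)} actions completed, slowest took {max(timings):.3f}s")
```

Dedicated tools add what this sketch leaves out, such as ramp-up schedules, protocol support, and reporting, which is why record-and-playback tools can get by with far less hand-written code.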

Why Automate Performance Testing?

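Automating performance testing saves time and effort because the same tests can run repeatedly without manual setup, for example on every build in a CI/CD pipeline. Running tests regularly surfaces performance problems early, when they are quicker to fix, and produces consistent results that can be compared across releases. This helps ensure that the application keeps working well under the expected number of users and leads to better software quality.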

What is the difference between concurrent users and simultaneous users in performance testing?

In performance testing, concurrent users are those who are active on the system during the same period, but they are not necessarily performing the same action at the same instant. Simultaneous users, on the other hand, are those who perform an action at the very same moment. For example, if 100 users are logged in at 9 AM, with some browsing, some searching, and some idle, they are concurrent users. If 100 users hit the “Submit” button in the exact same second, they are simultaneous users.
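The distinction can be illustrated with a small Python sketch. It is a simplified model, not how any specific tool implements it. Each thread stands for one user with a different "think time", and a `threading.Barrier` holds users at a common point so that they all submit together. The think times and the helper names `run_users` and `spread` are assumptions made for this example.

```python
import threading
import time


def run_users(count: int, simultaneous: bool) -> list[float]:
    """Simulate `count` users and return when each one 'submits' (seconds since start)."""
    barrier = threading.Barrier(count)
    submit_times: list[float] = []
    lock = threading.Lock()
    start = time.perf_counter()

    def user(index: int) -> None:
        # Every user is active in the same window but has a different think time.
        time.sleep(0.02 * index)
        if simultaneous:
            # Hold each user here until all have arrived, then release them together.
            barrier.wait()
        with lock:
            submit_times.append(time.perf_counter() - start)

    threads = [threading.Thread(target=user, args=(i,)) for i in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return submit_times


def spread(times: list[float]) -> float:
    """Gap between the earliest and the latest submit."""
    return max(times) - min(times)


if __name__ == "__main__":
    print(f"concurrent spread:   {spread(run_users(5, simultaneous=False)):.3f}s")
    print(f"simultaneous spread: {spread(run_users(5, simultaneous=True)):.3f}s")
```

In the concurrent run the submits are spread across the whole window, while in the simultaneous run they land almost together. Load testing tools typically offer a comparable synchronization feature for creating this kind of burst.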

What are the key metrics to consider in server performance monitoring during performance testing?

When monitoring server performance during performance testing, it is important to consider several key metrics:

- Response Time: How long it takes for the server to respond to a request.

- Throughput: The number of requests the server handles per second.

- CPU Usage: The percentage of the CPU’s capacity that the server is using.

- Memory Usage: The amount of memory the server is using.

- Disk I/O: The rate of read and write operations on the server’s disk.

- Error Rate: The proportion of requests that result in errors during the test.

These metrics help in understanding the server’s performance and identifying any potential bottlenecks.
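As a rough illustration, the request-level metrics (response time, throughput, and error rate) can be derived from raw test samples as sketched below. The `Sample` structure and the `summarize` function are hypothetical names for this example. Error rate is computed here as failed requests divided by total requests, and the 95th percentile uses the simple nearest-rank method. CPU, memory, and disk figures normally come from operating system or monitoring tools and are not covered by this sketch.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    latency_s: float  # response time of one request, in seconds
    ok: bool          # False if the request failed


def summarize(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Compute request-level metrics for a test run lasting `duration_s` seconds."""
    latencies = sorted(s.latency_s for s in samples)
    # Nearest-rank 95th percentile: the smallest value with at least 95% of samples at or below it.
    rank = math.ceil(0.95 * len(latencies))
    errors = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_s": sum(latencies) / len(latencies),
        "p95_response_s": latencies[rank - 1],
        "throughput_rps": len(samples) / duration_s,
        "error_rate": errors / len(samples),
    }


if __name__ == "__main__":
    # Ten made-up samples collected over a 5-second run; one request failed.
    samples = [Sample(latency_s=i / 10, ok=(i != 10)) for i in range(1, 11)]
    for name, value in summarize(samples, duration_s=5.0).items():
        print(f"{name}: {value:.3f}")
```

Reporting a high percentile alongside the average is a common choice because a handful of slow requests can hide behind a healthy-looking mean.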
