Top 60+ Artificial Intelligence Interview Questions & Answers

AI interviews can be challenging. You need a solid grasp of complex concepts and practical skills. Whether you’re gearing up for an AI interview or interviewing AI engineers, this list of Artificial Intelligence interview questions and answers will be super helpful.

Knowing what to expect gives candidates an edge and helps interviewers gauge skills and knowledge. It’s always good to know potential questions or what to ask in an AI interview.

Basic AI Interview Questions & Answers For Freshers

Question #1. What is Artificial Intelligence?

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think and learn like humans. These intelligent systems are capable of performing tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

Question #2. What are some real-life applications of Artificial Intelligence?

The applications of AI are vast and varied, spanning numerous industries and aspects of everyday life.

- Healthcare: AI is used for diagnostic purposes, predicting patient outcomes, and personalizing treatment plans. For instance, AI-powered imaging systems assist in identifying diseases like cancer at early stages.

- Finance: AI algorithms are extensively used in credit scoring, fraud detection, and automated trading to enhance efficiency and security.

- Transportation: Self-driving cars and drones utilize AI for navigation, obstacle detection, and route optimization.

- Retail: AI enhances customer experiences through personalized recommendations, inventory management, and automated customer service via chatbots.

- Manufacturing: AI-driven robots and predictive maintenance systems improve production efficiency and reduce downtime.

- Education: AI is used for personalized learning, automated grading, and helping identify students who need additional support.

- Entertainment: AI powers recommendation systems for streaming services, video games with adaptive difficulty, and even creative endeavors like music composition and scriptwriting.

- Home automation: AI enables smart home devices that learn user preferences to control lighting, temperature, and security systems autonomously.

- Agriculture: AI is applied in precision farming, crop monitoring, and even predicting yields, thereby increasing productivity and reducing costs.

- Energy: AI optimizes grid management, forecasts energy demands, and integrates renewable energy sources efficiently.

Question #3. Are artificial intelligence and machine learning the same?

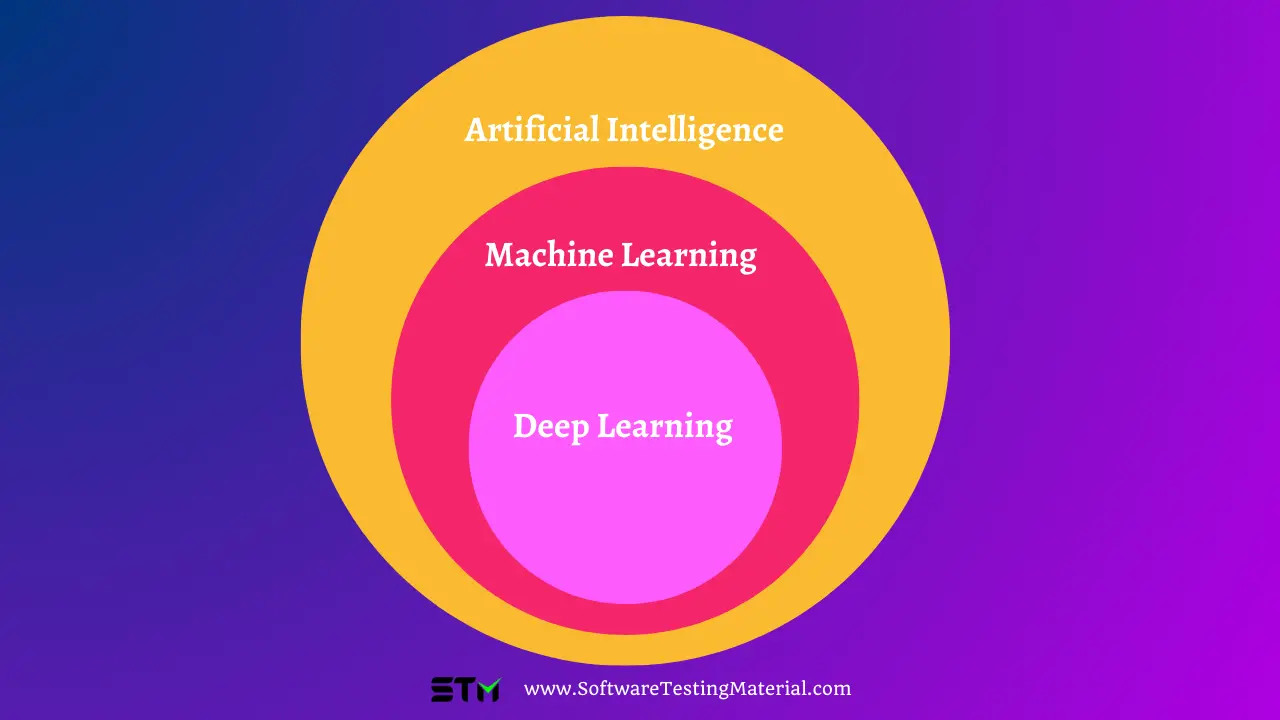

While artificial intelligence (AI) and machine learning (ML) are related, they are not the same.

AI is a broad field that encompasses various technologies aimed at creating systems capable of performing tasks that would typically require human intelligence, such as decision-making, language understanding, and problem-solving.

Machine learning, on the other hand, is a subset of AI that focuses on the development of algorithms that allow systems to learn from and make predictions based on data. Essentially, ML is one of the techniques used to achieve AI.

Question #4. What are the types of artificial intelligence?

AI can be categorized into two main types: narrow AI (weak AI), which is designed for a specific task, and general AI (strong AI), which has the ability to perform any intellectual task that a human can.

Question #5. What is Deep Learning?

Deep learning is a subset of machine learning, which itself falls under the broader category of artificial intelligence (AI). In essence, deep learning involves neural networks with three or more layers, which enable the modeling of complex patterns in data. These neural networks, inspired by the structure of the human brain, consist of interconnected layers of nodes, where each node represents a mathematical function that processes input data to produce an output. Deep learning excels in tasks such as image and speech recognition and natural language processing by leveraging large datasets and substantial computational power to iteratively improve model accuracy and performance.

Question #6. What is Machine Learning?

Machine learning is a branch of artificial intelligence (AI) that focuses on the development of algorithms and statistical models that enable computers to perform tasks without explicit instructions. Instead, machine learning systems learn from data by identifying patterns and making predictions or decisions based on that information.

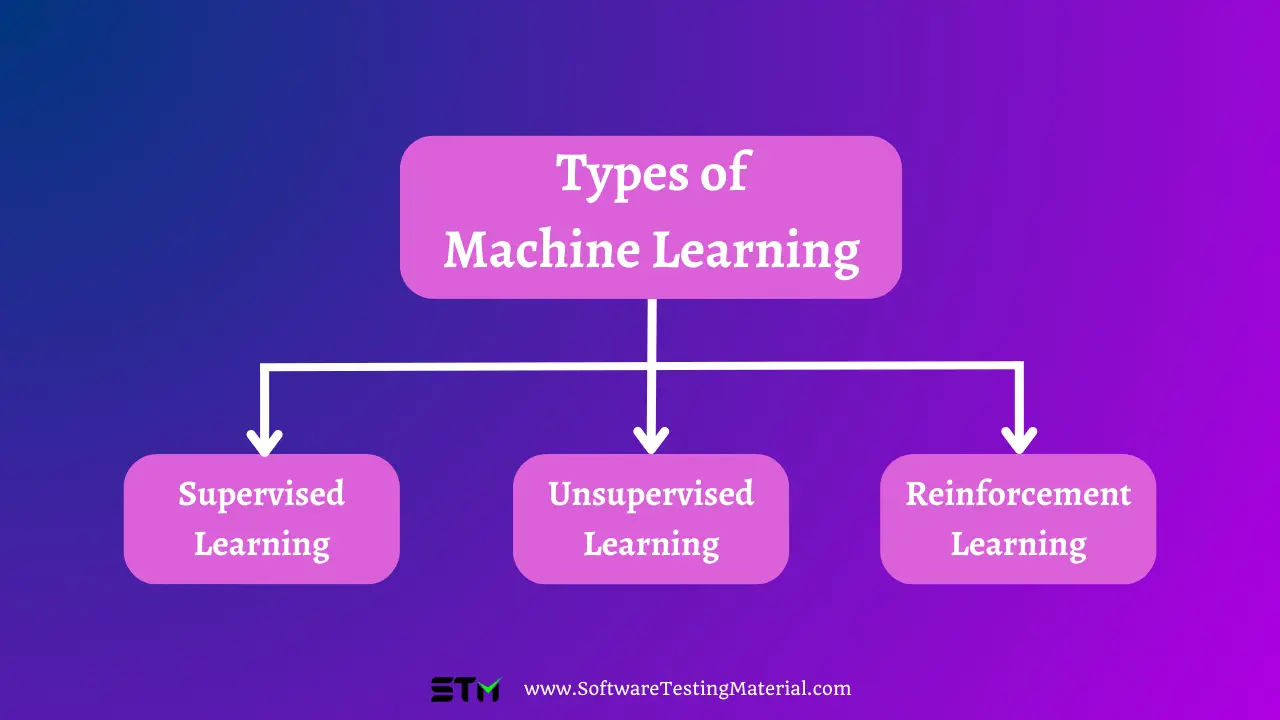

Question #7. What are the types of Machine Learning?

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning uses labeled data to train models, unsupervised learning finds hidden patterns in unlabeled data, and reinforcement learning employs a trial-and-error approach to optimize actions.

Common applications of machine learning include recommendation systems, fraud detection, and predictive analytics.

Question #8. What is Reinforcement Learning?

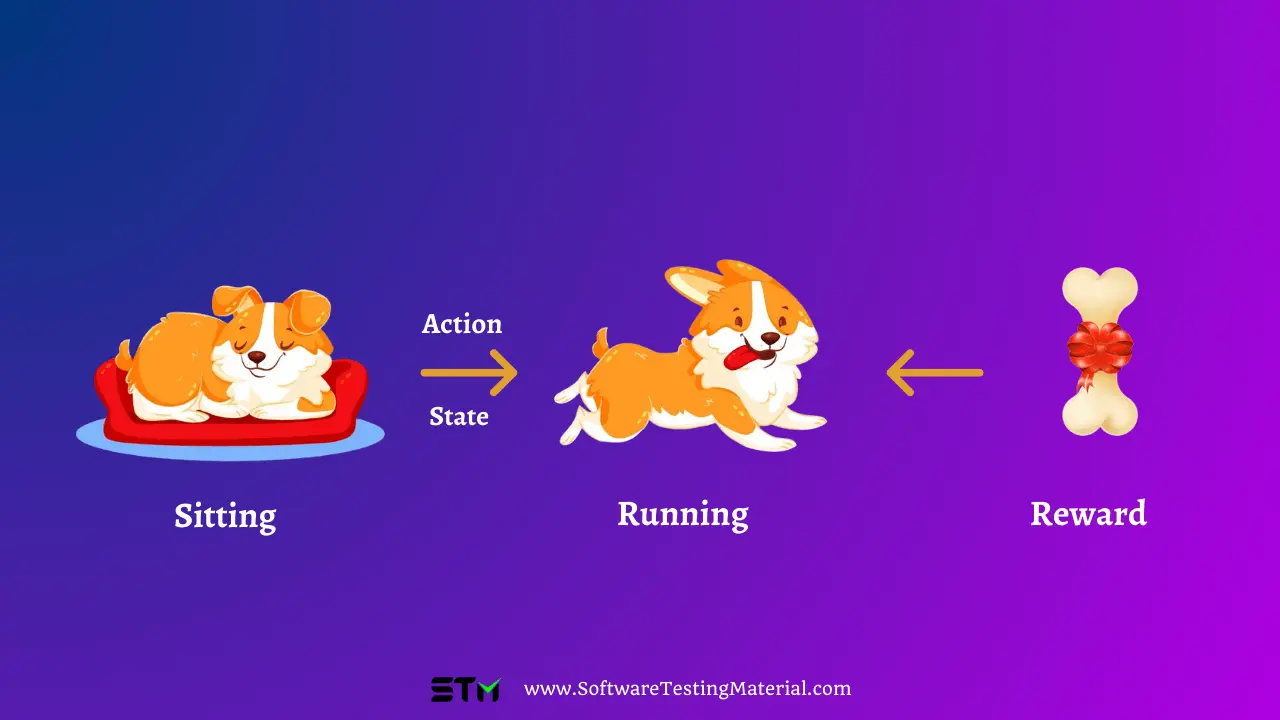

Reinforcement Learning is a type of machine learning where an agent learns to make decisions by performing certain actions and receiving rewards or penalties in return.

Imagine training a dog: you give it a reward when it does something good and nothing or a small penalty when it does something wrong. Over time, the dog learns to perform the actions that earn it treats.

Similarly, in Reinforcement Learning, the agent learns the best actions to take in different situations to maximize its overall rewards.

Question #9. What are the differences between Deep Learning and Machine Learning?

| Feature | Machine Learning | Deep Learning |
|---|---|---|
| Definition | A subset of AI that focuses on developing algorithms that allow computers to learn from data without being explicitly programmed. | A subset of machine learning involving neural networks with three or more layers to model complex patterns in data. |
| Data Dependency | Can work with smaller sets of data and often requires feature extraction. | Requires large amounts of data to train effectively and processes raw data directly. |
| Feature Engineering | Requires significant manual feature extraction and selection. | Automatically performs feature extraction through neural networks. |
| Execution Time | Generally faster in training and execution, especially with smaller datasets. | Typically requires more time and computational power due to its complex architecture and large datasets. |
| Applications | Used for tasks like recommendation systems, fraud detection, and predictive analytics. | Excels in tasks like image and speech recognition, natural language processing, and autonomous driving. |
| Interpretability | Often results in models that are easier to interpret and understand. | Creates models that can be more challenging to interpret, often treated as “black boxes.” |
| Computational Power | Less demanding in terms of computational resources and can be executed on standard CPUs. | Requires substantial computational power, often leveraging GPUs or TPUs for training. |

Question #10. What is Natural Language Processing (NLP)?

Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and humans through natural language. The ultimate objective of NLP is to enable computers to understand, interpret, and generate human language in a way that is both meaningful and useful.

Key applications of NLP include language translation, sentiment analysis, speech recognition, and chatbots, among others.

By leveraging sophisticated algorithms and large datasets, NLP systems can analyze nuances, context, and semantic meaning, thereby facilitating more natural and intuitive human-computer interactions.

Question #11. What is Natural Language Understanding (NLU)?

Natural Language Understanding (NLU) is a crucial subset of Natural Language Processing (NLP) that focuses specifically on the comprehension aspect of human language. NLU systems aim to comprehend the intent behind the text, interpreting the contextual nuances and semantic meaning. This involves breaking down human language into its components, such as syntax, entities, and relationships, to accurately grasp what is being communicated.

Key tasks in NLU include entity recognition, sentiment analysis, intent detection, and disambiguation.

By empowering machines with the ability to understand the subtleties of language, NLU forms the backbone of technologies like virtual assistants, automated customer service agents, and advanced language translation systems.

Question #12. What’s the difference between NLP and NLU?

| Aspect | Natural Language Processing (NLP) | Natural Language Understanding (NLU) |
|---|---|---|
| Focus | Broader scope, including both understanding and generation of human language | Specifically on understanding and interpreting human language |
| Key Tasks | Language translation, speech recognition, text generation, sentiment analysis | Entity recognition, intent detection, sentiment analysis, disambiguation |
| Objective | Enabling computers to process and generate natural language in a meaningful way | Enabling computers to comprehend the intent and context behind human language |
| Techniques Used | Statistical methods, machine learning, deep learning, rule-based systems | Semantic analysis, contextual interpretation, knowledge representation |
| Application Examples | Chatbots, machine translation, summarizers, automated writing, voice-activated assistants | Virtual assistants, chatbots, sentiment analysis tools, and advanced language translators |

Question #13. What are Neural Networks?

Neural Networks are a class of machine learning algorithms inspired by the structure and function of the human brain. These computational models consist of interconnected layers of nodes, or “neurons,” that process data in a sequential manner. Each neuron receives input, processes it using a set of weights and biases, passes it through an activation function, and then relays the output to the subsequent layer. This architecture allows neural networks to learn and identify complex patterns in data through a process called training, which involves adjusting the weights based on the error of the predictions. Neural networks are the backbone of deep learning technologies and have been instrumental in achieving significant advancements in fields such as image and speech recognition, natural language processing, and autonomous systems.
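To make the "weights, bias, activation function" description concrete, here is a minimal sketch of a forward pass through a tiny network in plain Python. The weights, biases, and layer sizes are hand-picked, hypothetical values chosen only for illustration; a real network would learn them during training, and the sigmoid is just one of several possible activation functions.

```python
import math

def sigmoid(x):
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all receive the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_w = [[0.5, -0.5], [1.0, 1.0]]
hidden_b = [0.0, -1.0]
output_w = [[1.0, -1.0]]
output_b = [0.0]

x = [1.0, 1.0]
hidden = layer(x, hidden_w, hidden_b)      # output of the hidden layer
output = layer(hidden, output_w, output_b)  # relayed to the next layer
print(hidden, output)
```

Training would then adjust `hidden_w`, `hidden_b`, `output_w`, and `output_b` to reduce the prediction error; that step is left out of this sketch.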

Question #14. What are the different platforms for Artificial Intelligence (AI) development?

When embarking on AI development, choosing the right platform is crucial for successfully implementing AI solutions. Some popular platforms for AI development include:

- TensorFlow: Developed by Google, TensorFlow is an open-source platform that provides a comprehensive ecosystem of tools, libraries, and community resources, enabling developers to build and deploy machine learning models effectively.

- PyTorch: An open-source framework created by Facebook’s AI Research lab, PyTorch offers a robust and flexible platform particularly appreciated for its dynamic computational graph, which allows for rapid prototyping.

- IBM Watson: Known for its strong natural language processing capabilities, IBM Watson provides extensive APIs and services designed to facilitate the development of sophisticated AI applications.

- Microsoft Azure Machine Learning: This cloud-based service offers a range of tools for building, training, and deploying machine learning models, benefiting from Microsoft’s extensive cloud infrastructure and integration with other Azure services.

- Google Cloud AI Platform: This comprehensive suite combines the power of TensorFlow with Google Cloud services to deliver scalable, high-performance AI applications.

- Amazon SageMaker: A fully managed service provided by AWS, Amazon SageMaker simplifies the process of building, training, and deploying machine learning models at scale, coupled with extensive toolsets for data labeling and workflows.

- H2O.ai: An open-source AI platform renowned for its ease of use, H2O.ai offers scalable machine learning and deep learning solutions, focusing on user-friendly interfaces and automated machine learning capabilities.

Each of these platforms brings its own strengths and specialized tools, allowing developers to choose according to their specific project needs and expertise.

Question #15. What are the programming languages used for Artificial Intelligence?

Some of the programming languages used for Artificial Intelligence are:

- Python

- R

- Java

- C++

- Julia

- Lisp

- Prolog

- Scala

- Haskell

Artificial Intelligence Interview Questions for Experienced

Question #16. What is Q-Learning?

Q-Learning is a model-free reinforcement learning algorithm. It helps an artificial agent learn the best action to take in each situation by maintaining a Q-value: an estimate of the total future reward for taking a given action in a given state. The agent interacts with the environment, receives feedback in the form of rewards or penalties, and nudges the relevant Q-value toward the reward it just received plus the discounted value of the best action available in the next state. Repeated over many interactions, these estimates improve, and the agent can act well simply by choosing the action with the highest Q-value in each state. Essentially, Q-Learning enables a system to learn from its experiences, improving its performance over time.
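The loop described above (act, observe a reward, update) can be sketched as tabular Q-Learning on a toy problem. Everything about the environment here is a hypothetical assumption made for illustration: five states on a line, two actions, and a reward of 1 only for reaching the last state. The learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are likewise arbitrary choices.

```python
import random

N_STATES = 5       # states 0..4 on a line; state 4 is the goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right

def step(state, action):
    """Hypothetical toy environment: reward 1.0 only when the goal is reached."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _episode in range(episodes):
        state = 0
        for _step in range(200):  # safety cap on episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])          # exploit, breaking ties randomly
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Q-Learning update: move the estimate toward
            # reward + discounted value of the best next action.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

q_table = train()
# Greedy policy: the highest-valued action in each non-goal state.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

In this toy setup the learned policy should favour moving right in every state, since that is the only way to reach the reward.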

Question #17. What are some of the main challenges in Artificial Intelligence?

Some of the main challenges in Artificial Intelligence include:

- Data Privacy and Security: Ensuring that AI systems do not misuse personal data and protect user privacy is crucial.

- Bias and Fairness: AI algorithms can sometimes be biased, reflecting the prejudices found in their training data. Ensuring fairness and avoiding discrimination is a significant challenge.

- Interpretability: Understanding how AI systems make decisions is difficult, especially with complex models like deep learning. This lack of transparency can be problematic in critical applications.

- Ethical Concerns: The ethical implications of AI, such as job displacement and its impact on society, need to be addressed thoughtfully.

- Scalability: Developing AI systems that can scale efficiently and handle large amounts of data without significant performance loss is another hurdle.

Question #18. Which assessment is used to test the intelligence of a machine? Can you explain it?

The Turing Test is used to assess the intelligence of a machine. It was proposed by Alan Turing in 1950. In this test, a human evaluator interacts with both a human and a machine through text-based communication. If the evaluator cannot reliably tell which is which, the machine is considered to have demonstrated human-like intelligence. The idea is to see if the machine can mimic human responses well enough to be indistinguishable from a real person.

Question #19. Can you explain what a Markov Decision Process (MDP) is?

A Markov Decision Process (MDP) is a way to describe a decision-making problem where outcomes are partly random and partly under the control of a decision maker. It is used in the fields of mathematics, economics, and artificial intelligence. An MDP consists of four main components: states, actions, rewards, and transitions.

- States represent different situations or configurations the decision-maker might find themselves in.

- Actions are the choices available to the decision-maker at any given state.

- Rewards are the feedback or returns received after taking an action.

- Transitions describe the probabilities of moving from one state to another after taking a certain action.

The goal in an MDP is to find a strategy or policy that maximizes the total reward over time, guiding the decision-maker on which actions to take in different states for the best possible outcome.
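As a rough illustration of how the four components fit together, the sketch below defines a tiny, made-up MDP and solves it with value iteration, one common way of finding a good policy. The two states, their actions, the probabilities, the rewards, and the discount factor `GAMMA` are all assumptions invented for this example.

```python
GAMMA = 0.9  # discount factor: how much future rewards count (assumed value)

# transitions[state][action] = list of (probability, next_state, reward)
transitions = {
    "A": {
        "stay": [(1.0, "A", 0.0)],
        "go": [(0.8, "B", 0.0), (0.2, "A", 0.0)],  # "go" sometimes fails
    },
    "B": {
        "stay": [(1.0, "B", 1.0)],                 # staying in B pays 1 each step
        "go": [(1.0, "A", 0.0)],
    },
}

def action_value(values, state, action):
    """Expected reward plus discounted value of wherever the action leads."""
    return sum(p * (r + GAMMA * values[s2])
               for p, s2, r in transitions[state][action])

def value_iteration(tol=1e-8):
    values = {s: 0.0 for s in transitions}
    while True:
        new_values = {
            s: max(action_value(values, s, a) for a in transitions[s])
            for s in transitions
        }
        delta = max(abs(new_values[s] - values[s]) for s in transitions)
        values = new_values
        if delta < tol:
            break
    # The policy picks, in each state, the action with the highest value.
    policy = {
        s: max(transitions[s], key=lambda a: action_value(values, s, a))
        for s in transitions
    }
    return values, policy

values, policy = value_iteration()
print(values, policy)
```

Here the best strategy is to leave A and then remain in B, because B is the only state that pays a reward.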

Question #20. What is a Hidden Markov Model (HMM)?

A Hidden Markov Model (HMM) is a statistical model used to represent systems that are assumed to be a Markov process with hidden states. This means there are states you cannot directly observe, but you can see some evidence or observations that give you clues about those states. An HMM includes:

- States: The different situations or conditions that the system can be in, though you can’t observe them directly.

- Observations: The visible part you can see, which gives you information about the hidden states.

- Transition probabilities: The chances of moving from one hidden state to another.

- Emission probabilities: The probabilities of seeing a particular observation from a given hidden state.

In simpler terms, think of an HMM like trying to figure out the weather (hidden states) by only looking at someone’s outfit (observations). The model helps predict the hidden states by analyzing the patterns and probabilities of the observations.
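The weather-and-outfit analogy can be turned into a small sketch using the Viterbi algorithm, a standard way to recover the most likely sequence of hidden states from the observations. All probabilities below are invented for illustration, as are the state and observation names.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path and its probability."""
    # best[t][s] = (probability of the best path ending in s at time t,
    #               the state that path came from)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            prob, prev = max((best[-1][p][0] * trans_p[p][s], p) for p in states)
            column[s] = (prob * emit_p[s][obs], prev)
        best.append(column)
    # Backtrack from the most likely final state.
    last = max(states, key=lambda s: best[-1][s][0])
    prob = best[-1][last][0]
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, prob

# Hypothetical model: hidden weather, observed outfit.
states = ["Sunny", "Rainy"]
start_p = {"Sunny": 0.6, "Rainy": 0.4}
trans_p = {  # transition probabilities between hidden states
    "Sunny": {"Sunny": 0.7, "Rainy": 0.3},
    "Rainy": {"Sunny": 0.4, "Rainy": 0.6},
}
emit_p = {   # emission probabilities of each outfit given the weather
    "Sunny": {"t-shirt": 0.8, "coat": 0.2},
    "Rainy": {"t-shirt": 0.1, "coat": 0.9},
}

path, prob = viterbi(["t-shirt", "coat", "coat"], states, start_p, trans_p, emit_p)
print(path, prob)
```

With these made-up numbers, seeing a t-shirt followed by two coats is best explained by a sunny day followed by two rainy ones.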

Question #21. What is the Difference Between Parametric and Non-parametric Models?

| Aspect | Parametric Models | Non-parametric Models |
|---|---|---|
| Definition | Assume a specific form for the function or distribution. | Do not assume any specific form and are more flexible. |
| Complexity | Simpler, with fewer parameters to estimate. | More complex, often requiring larger datasets. |
| Flexibility | Less flexible due to predefined form. | More flexible as they adapt to the data structure. |
| Assumptions | Strong assumptions about the data distribution. | Fewer assumptions about the underlying data distribution. |
| Examples | Linear regression, logistic regression, normal distribution. | K-nearest neighbors, decision trees, kernel density estimation. |
| Data Requirements | Can work well with smaller datasets. | Typically requires a larger dataset to perform well. |
| Estimation | Estimates a fixed number of parameters. | Can have an infinite-dimensional parameter space. |
| Overfitting | Less prone to overfitting with adequate assumptions. | Can be more prone to overfitting if not managed properly. |

Question #22. What is the Minimax Algorithm?

The Minimax algorithm is a decision-making tool used in artificial intelligence, particularly in game theory and computer chess. It helps determine the optimal move for a player, assuming that the opponent is also playing optimally. The main goal of the algorithm is to minimize the possible loss for a worst-case scenario. Essentially, it aims to maximize the minimum gain.

Terminologies Involved in a Minimax Problem

- Game Tree: A representation of possible moves in a game, with each node representing a game state and each edge representing a move.

- Minimizer: One of the players in the game who tries to minimize the score or gain of the maximizer. This player assumes the role of the opponent.

- Maximizer: The player who tries to maximize their own score or gain. This player is typically the one using the Minimax algorithm.

- Utility Function: Also known as the payoff function, it assigns a numerical value to the outcome of the game, representing the benefit or cost to a player.

- Pruning: A technique to reduce the number of nodes evaluated in the search tree, making the algorithm more efficient. One common method of pruning is Alpha-Beta pruning.

- Depth: The level of the game tree being evaluated. Often, the Minimax algorithm doesn’t evaluate the entire tree due to resource constraints; hence, it operates up to a certain depth.

- Terminal State: The end state of the game where no further moves are possible. This state helps calculate the utility value for the Minimax algorithm.
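The terms above map onto a few lines of code. The sketch below represents a game tree as nested lists whose leaves are utility values (an assumption made to keep the example short; a real game would generate child states from moves), and shows plain Minimax next to a version with Alpha-Beta pruning. Both functions and the sample tree are illustrative, not taken from any particular game engine.

```python
def minimax(node, maximizing):
    """Plain Minimax over a nested-list game tree."""
    if isinstance(node, (int, float)):   # terminal state: return its utility
        return node
    child_values = [minimax(child, not maximizing) for child in node]
    return max(child_values) if maximizing else min(child_values)

def alphabeta(node, maximizing, alpha=float("-inf"), beta=float("inf")):
    """Minimax with Alpha-Beta pruning: same result, fewer nodes visited."""
    if isinstance(node, (int, float)):
        return node
    if maximizing:
        value = float("-inf")
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:   # the minimizer will never allow this branch
                break
        return value
    value = float("inf")
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:       # the maximizer already has a better option
            break
    return value

# Depth-2 tree: the maximizer picks a branch, then the minimizer picks a leaf.
tree = [[3, 5], [2, 9], [0, 7]]
print(minimax(tree, True), alphabeta(tree, True))
```

The minimizer would answer the three branches with 3, 2, and 0 respectively, so the maximizer's best guaranteed outcome is 3. With pruning, the leaves 9 and 7 are never examined because the first leaf in each of those branches already makes the branch worse than 3.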

Question #23. What do You Understand by Reward Maximisation?

Reward maximisation is a concept used in decision-making processes, particularly in fields like artificial intelligence and economics. In simple terms, it refers to the strategy where an individual or an algorithm aims to achieve the highest possible reward or benefit from a set of actions. This is done by evaluating the potential outcomes and choosing the actions that will lead to the maximum gain. In the context of AI, for example, a system might use reward maximisation to learn which behaviours lead to the best results and then prioritise those actions in the future.

Question #24. What is Overfitting?

Overfitting happens when a model learns the details and noise in the training data to the extent that it performs well on the training data but poorly on new, unseen data. This means that the model is too complex and has captured irrelevant information, reducing its generalization ability. Essentially, it’s like being great at memorizing specific questions for a test but struggling with similar questions that weren’t part of the initial study material. Overfitting can be managed by using techniques such as cross-validation, pruning, and regularization.

Question #25. What are the Techniques Used to Avoid Overfitting?

To avoid overfitting, several techniques can be employed:

- Cross-Validation: Splitting the data into parts, training the model on some parts and validating on others to ensure the model performs well on different sets of data.

- Pruning: Cutting back the complexity of the model, such as by removing branches in a decision tree that do not add predictive power.

- Regularization: Adding a penalty for larger model coefficients to simplify the model.

- Early Stopping: Halting the training process before the learner begins to learn noise.

- Reduced Feature Complexity: Limiting the number of features in the model to only those that provide meaningful information.

- Data Augmentation: Increasing the size of the training data by making slight modifications to data points to teach the model to generalize better.

These methods help ensure the model generalizes well to unseen data and doesn’t just memorize the training dataset.
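As one concrete example from the list, cross-validation comes down to splitting the data so that every sample is used for validation exactly once. The helper below is a minimal, hypothetical sketch of k-fold splitting written from scratch; in practice you would usually shuffle the data first and rely on a library implementation.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

folds = list(k_fold_indices(10, 3))
for train, validation in folds:
    # A real workflow would fit the model on `train` and score it on
    # `validation`, then average the k scores.
    print(len(train), validation)
```

A model that scores well on its training folds but poorly on the held-out folds is showing the overfitting pattern described above.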

Question #26. Can you explain in simple terms the difference between Natural Language Processing (NLP) and Text Mining?

Natural Language Processing, or NLP, is about teaching computers to understand and interact with human language. It’s like giving machines the ability to read, listen, and make sense of our words, whether we’re talking or writing. On the other hand, Text Mining is more about finding useful information from large amounts of text. It’s like digging through piles of documents to find hidden patterns, trends, or facts. So, while NLP is focused on communication and understanding, Text Mining is focused on analysis and discovery.

Question #27. Can you explain what Fuzzy Logic is in simple terms?

Fuzzy Logic is a form of reasoning that allows for flexible, approximate conclusions, similar to human decision-making. Unlike traditional logic, which only accepts absolute true or false values (1 or 0), Fuzzy Logic allows any degree of truth between 0 and 1. This means that instead of saying something is either hot or cold, Fuzzy Logic can say it is, for example, 0.7 hot and 0.3 cold at the same time. This method is often used in systems that need to handle uncertainty and imprecise information, like climate control systems in cars or washing machines.
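A small sketch may help here. The membership function below assigns each temperature a degree of "hotness" between 0 and 1, and the three operators are the classic min/max/complement definitions of fuzzy AND, OR, and NOT. The 20 °C and 35 °C thresholds are arbitrary assumptions chosen for illustration.

```python
def hot_membership(temp_c):
    """Degree (0 to 1) to which a temperature counts as 'hot'.

    Hypothetical thresholds: not hot at all at or below 20 C,
    fully hot at or above 35 C, rising linearly in between.
    """
    if temp_c <= 20:
        return 0.0
    if temp_c >= 35:
        return 1.0
    return (temp_c - 20) / 15

def cold_membership(temp_c):
    """In this simple sketch, 'cold' is just the complement of 'hot'."""
    return 1.0 - hot_membership(temp_c)

def fuzzy_and(a, b):
    return min(a, b)

def fuzzy_or(a, b):
    return max(a, b)

def fuzzy_not(a):
    return 1.0 - a

temp = 26
print(hot_membership(temp), cold_membership(temp))
print(fuzzy_and(hot_membership(temp), cold_membership(temp)))
```

A climate controller could use such degrees to scale its response, for example running the fan faster the "hotter" the cabin is, rather than switching abruptly at a single threshold.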

Question #28. What is the Difference Between Eigenvalues and Eigenvectors?

Eigenvalues and eigenvectors are concepts from linear algebra commonly used in fields like physics, engineering, and computer science. In simple terms, an eigenvector of a matrix is a non-zero vector that, when multiplied by the matrix, yields a scaled version of itself. The scaling factor is the eigenvalue associated with that eigenvector. Think of it this way: if you have a transformation represented by a matrix and you apply it to an eigenvector, the vector stays on the same line through the origin; only its length changes, scaled by the eigenvalue (a negative eigenvalue flips it to point the opposite way). Thus, the eigenvector tells us "which direction" is preserved, while the eigenvalue tells us "how much" the eigenvector is stretched or shrunk during the transformation.
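The relationship can be checked numerically. The sketch below uses a small 2x2 matrix picked for illustration, whose eigenvectors and eigenvalues are easy to verify by hand, and confirms that multiplying by the matrix only scales those vectors. The helper names are made up for this example, and the collinearity check works only for 2D vectors.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def is_eigenvector_2d(matrix, vector):
    """True if matrix @ vector lies on the same line as vector (2D only)."""
    result = mat_vec(matrix, vector)
    # Two 2D vectors are collinear when their cross product is zero.
    return result[0] * vector[1] - result[1] * vector[0] == 0

A = [[2, 1],
     [1, 2]]

v1, lam1 = [1, 1], 3    # A @ v1 = [3, 3]  = 3 * v1
v2, lam2 = [1, -1], 1   # A @ v2 = [1, -1] = 1 * v2

print(mat_vec(A, v1), [lam1 * x for x in v1])
print(mat_vec(A, v2), [lam2 * x for x in v2])
print(is_eigenvector_2d(A, [1, 0]))  # [1, 0] maps to [2, 1]: direction changes
```

The vector `[1, 0]` is included as a counterexample: the matrix rotates it off its original line, so it is not an eigenvector.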

Question #29. What are Some Differences Between Classification and Regression?

Classification and Regression are both types of supervised learning techniques used in machine learning, but they serve different purposes.

Classification is used to predict categorical outcomes. In other words, it’s about assigning labels from a predefined set of categories. For instance, you might use classification to determine whether an email is spam or not, or whether a photo contains a cat or a dog. The outcome is discrete and finite.

Regression, on the other hand, is used to predict numerical, continuous outcomes. It’s about finding the relationship between variables and predicting a number. For example, you might use regression to predict the price of a house based on its features like size and location, or to forecast temperatures.

To summarize, Classification deals with predicting categories, while Regression deals with predicting numerical values.
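The contrast shows up directly in what each kind of model returns. In the sketch below, a least-squares line fit stands in for regression (it returns a number) and a 1-nearest-neighbour rule stands in for classification (it returns a label). Both are simple stand-ins chosen for brevity, and the house sizes, prices, and "suspicious word" counts are made-up data.

```python
def fit_line(xs, ys):
    """Regression: ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def nearest_neighbor_label(x, examples):
    """Classification: copy the label of the closest (value, label) example."""
    return min(examples, key=lambda ex: abs(ex[0] - x))[1]

# Regression: predict a continuous price from house size (hypothetical data).
sizes = [50, 80, 100, 120]
prices = [150, 240, 300, 360]
slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 90 + intercept

# Classification: predict a category from a count of suspicious words
# in an email (hypothetical data).
emails = [(2, "not spam"), (3, "not spam"), (10, "spam"), (12, "spam")]
predicted_label = nearest_neighbor_label(9, emails)

print(predicted_price, predicted_label)
```

The regression output can be any number on a continuous scale, while the classifier can only ever answer with one of the labels it has seen.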

Question #30. What is an Artificial Neural Network? What are Some Commonly Used Artificial Neural Networks?

An Artificial Neural Network (ANN) is a computing system inspired by the biological neural networks that make up animal brains. It is composed of interconnected units called neurons that work together to process information. These neurons are organized into layers: an input layer, one or more hidden layers, and an output layer. Each connection between neurons has a weight that is adjusted during the learning process to help the neural network make predictions or decisions based on input data.

Some commonly used Artificial Neural Networks include:

- Feedforward Neural Networks (FNN): These are the simplest type of artificial neural network, in which connections between the nodes do not form a cycle. Information flows in one direction only: from the input nodes, through the hidden nodes (if any), to the output nodes.

- Recurrent Neural Networks (RNN): Designed to recognize patterns in sequences of data, RNNs have connections that can form cycles. This makes them effective for tasks such as speech recognition and time-series prediction.

- Convolutional Neural Networks (CNN): Highly effective for image processing tasks, CNNs use convolutional layers to filter the input data, making them suitable for image and video recognition.

- Generative Adversarial Networks (GANs): These consist of two neural networks, one generating data and the other evaluating it, to create data that is almost indistinguishable from real data. They are used in generating images, videos, and other types of content.

These various types of neural networks are employed in specific domains based on their unique strengths and capabilities.

Question #31. Can you explain what a rational agent is and what rationality means in simple terms?

A rational agent is a type of system or entity, like a robot or computer program, that makes decisions aimed at achieving the best possible outcome based on the information it has. Rationality, in this context, refers to the ability to act in a way that maximizes the chances of success or effectiveness, often by following logical and optimized decision-making strategies. In essence, a rational agent uses reasoning to make the best choices to reach its goals.

Question #32. What is Game Theory?

Game Theory is a field of study that examines how individuals make decisions in strategic situations where the outcome of one person’s choice depends on the actions of others. It’s like studying a game where each player thinks about what others might do and tries to make the best move. This theory helps in understanding and predicting behaviors in competitive and cooperative environments, such as business negotiations, economics, and even social interactions.

Question #33. Can you explain how Game Theory and AI are related?

Game Theory and AI are closely related because both involve making decisions in strategic situations. In simple terms, they deal with situations where the outcome depends on the choices made by different players or agents. AI uses principles from Game Theory to predict and understand how these players or agents will behave. For instance, in AI, Game Theory can help in designing algorithms for negotiation, competition, and cooperation, enabling machines to make smarter decisions by anticipating the actions and reactions of others. This combination allows AI systems to be more effective in complex, dynamic environments like economics, robotics, and multi-agent systems.

Question #34. What are feature vectors in the context of Machine Learning?

In simple terms, a feature vector is a list of numbers that represent important characteristics or properties of an object that you are trying to analyze with a machine learning model. Think of it as a way to convert things like images, text, or any data into a format that the machine can understand and work with. Each number in the list corresponds to a particular feature of the object, like its size, color, or shape. By using feature vectors, the machine learning model can compare these characteristics and make decisions based on them, such as classifying objects, making predictions, or identifying patterns.
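For text, one of the simplest ways to build a feature vector is a bag-of-words count: each position in the list records how often a particular vocabulary word appears. The sketch below uses a tiny, hypothetical vocabulary and compares the resulting vectors with cosine similarity, a common way to measure how alike two feature vectors are.

```python
import math
from collections import Counter

def bag_of_words(text, vocabulary):
    """Turn a sentence into a list of word counts, one per vocabulary word."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]

def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

vocabulary = ["cat", "dog", "sat", "ran"]  # hypothetical, hand-picked features

v1 = bag_of_words("the cat sat", vocabulary)
v2 = bag_of_words("the cat ran", vocabulary)
v3 = bag_of_words("the dog ran", vocabulary)

print(v1, v2, v3)
print(cosine_similarity(v1, v2), cosine_similarity(v1, v3))
```

Once objects are expressed as vectors like these, a model can compare them numerically: here the first two sentences share a feature ("cat") and score as more similar than the first and third, which share none.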

Question #35. What are Generative Adversarial Networks (GANs) and how do they work?

Generative Adversarial Networks, or GANs, are a type of deep learning model used in machine learning. They consist of two neural networks that are set up to compete against each other. One network is called the Generator, and it creates data that mimics real examples. The other network is called the Discriminator, and its job is to figure out if the data is real or created by the Generator.

Here’s how they work: the Generator starts by making fake data, and the Discriminator tries to tell if that data is fake or real. Initially, the Generator might not produce very convincing data, so the Discriminator has an easy job. But over time, the Generator improves by learning from its mistakes and tries to make more realistic data to fool the Discriminator. This competition keeps going, with both networks getting better at their tasks, until the Generator produces very realistic data. This technique is often used for creating realistic images, videos, and other types of data.

Question #36. What is Transfer Learning and what are its advantages?

Transfer learning is a machine learning technique where a model trained on one task is reused on a different but related task. Think of it like learning to play the guitar and then using that knowledge to learn the piano faster. The idea is to transfer the knowledge gained from the first task to improve the performance on the new task.

Here are some advantages of transfer learning:

- Faster Training: Since the model has already learned some features from the initial task, it can learn the new task more quickly.

- Reduced Data Requirements: Transfer learning can be effective even with less data, which is helpful when you don’t have a large dataset for the new task.

- Improved Performance: Models using transfer learning often perform better because they leverage pre-existing knowledge.

- Resource Efficiency: It saves computational resources because you don’t need to train a model from scratch.

In summary, transfer learning makes it easier and more efficient to develop models for new tasks by reusing and adapting pre-trained models.

Question #37. What is the difference between symbolic and connectionist AI?

The difference between symbolic and connectionist AI comes down to how they approach problem-solving and learning.

Symbolic AI, also known as “Good Old-Fashioned AI” (GOFAI), uses explicit rules and logic to represent knowledge. Think of it like using a detailed recipe to cook a dish. The AI follows step-by-step instructions given by humans to solve problems. This approach makes it easier to understand how the AI reaches its conclusions, but it can be limited in handling complex or ambiguous situations.

Connectionist AI, on the other hand, is inspired by how the human brain works. It mainly uses neural networks to learn from data. Imagine teaching someone to cook by showing them lots of examples rather than giving them a recipe. The AI learns patterns from the data to make decisions, which can be more flexible and powerful for certain tasks. However, it often acts like a “black box,” meaning it can be hard to understand exactly how it reaches its conclusions.

To sum it up, symbolic AI uses logic and rules, while connectionist AI learns from data to mimic the brain’s way of processing information.

Question #38. What are the ethical considerations in AI?

Ethical considerations in AI focus on ensuring that AI technology is developed and used responsibly and fairly. The main areas, each framed as a question a team should be able to answer, are:

- Bias and Fairness: How do you ensure that your AI system treats all people fairly, without favouring certain groups over others?

- Privacy: What measures do you take to protect people’s personal data when using AI?

- Transparency: How do you make sure that the decisions made by AI systems can be understood and explained?

- Accountability: Who is responsible if an AI system makes a mistake or causes harm?

- Safety: What steps do you take to ensure AI systems do not cause unintended consequences or harm?

Question #39. How can AI be applied in the healthcare sector?

AI can be applied in the healthcare sector in numerous ways to improve patient care and streamline operations. For instance, AI algorithms can analyse medical images, such as X-rays and MRIs, to flag abnormalities quickly and support doctors in reaching an accurate diagnosis. AI can also help with diagnosing diseases by analysing a patient’s symptoms and medical history to suggest possible conditions. Additionally, AI can assist in personalised medicine by predicting how different patients might respond to various treatments based on their genetic information. Furthermore, AI-powered chatbots can provide instant support and information to patients, making healthcare more accessible. Finally, AI can optimise administrative tasks in hospitals, like scheduling appointments and managing patient records, thus allowing healthcare professionals to focus more on patient care.

Question #40. Can you explain the concept of decision trees in Machine Learning in simple terms?

A decision tree is a type of model used in machine learning that helps make decisions based on input data. Imagine it like a flowchart that starts with a single question or decision point and then branches out into different possible outcomes based on the answers. Each branch represents a possible decision or answer to a question, and each endpoint (or leaf) represents a final decision or prediction. Decision trees are helpful because they break down complex decision-making processes into simpler, more manageable parts, making it easier to understand and interpret the logic behind the decisions. They are widely used for both classification and regression tasks.
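The flowchart idea can be sketched in a few lines of Python. The tree below is written by hand, and the feature names (`temperature_c`, `humidity_pct`), thresholds, and labels are invented for illustration. A real decision tree would learn its questions and thresholds from training data, typically by choosing the splits that best separate the classes.

```python
# Internal nodes ask a question about one feature; leaves hold the prediction.
tree = {
    "feature": "temperature_c",
    "threshold": 25,
    "left": {"leaf": "stay in"},          # taken when temperature_c <= 25
    "right": {                            # taken when temperature_c > 25
        "feature": "humidity_pct",
        "threshold": 70,
        "left": {"leaf": "play outside"},  # humidity_pct <= 70
        "right": {"leaf": "stay in"},      # humidity_pct > 70
    },
}

def predict(node, sample):
    """Walk from the root to a leaf, following one branch per question."""
    while "leaf" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["leaf"]

print(predict(tree, {"temperature_c": 30, "humidity_pct": 50}))  # play outside
print(predict(tree, {"temperature_c": 30, "humidity_pct": 80}))  # stay in
```

Because every prediction is just a path through the tree, you can read off exactly which questions led to a given answer, which is where the interpretability comes from.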

Question #41. What are the challenges in Natural Language Processing?

Natural Language Processing (NLP) is a field in artificial intelligence that focuses on the interaction between computers and human language. One of the challenges in NLP is understanding the context and nuance of language, which can vary widely based on cultural and regional differences. Another challenge is dealing with ambiguity, where a single word or phrase can have multiple meanings. Additionally, NLP systems must handle the vast diversity in human language, including slang, idiomatic expressions, and evolving language uses. Lastly, there’s the difficulty of processing and generating human-like text that is coherent and contextually appropriate, which requires advanced understanding and significant computational resources.

Question #42. How is AI used in autonomous vehicles?

AI is used in autonomous vehicles to help the car drive itself without the need for a human driver. It uses a combination of cameras, sensors, and software to understand the environment around the car. The AI system processes data from the sensors to identify objects like other cars, pedestrians, traffic signs, and obstacles. It then makes decisions on how to navigate the road, such as when to stop, go, or change lanes. AI helps the car predict what might happen next, like a pedestrian crossing the street, and ensures that the vehicle reacts safely. This technology aims to make driving safer and more efficient.

Question #43. What is the role of data preprocessing in Machine Learning?

Data preprocessing is a crucial step in machine learning because it prepares raw data for analysis. Before feeding the data into a machine learning model, it needs to be cleaned and transformed to remove any errors or inconsistencies. This process includes handling missing values, normalizing data ranges, and converting categorical data into a numerical format. Ensuring the data is in the right format improves the performance and accuracy of the machine learning model, making it easier to uncover patterns and make reliable predictions. Interviewers often follow up by asking how you would approach data preprocessing for a new dataset.
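As a rough illustration, the three preprocessing steps mentioned above can be sketched in plain Python. The helper names (`impute_mean`, `min_max_scale`, `one_hot`) and the sample data are assumptions made for this example; in a real project you would usually rely on a library such as pandas or scikit-learn instead.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the known values."""
    known = [v for v in values if v is not None]
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Turn each category into a 0/1 vector with one position per category."""
    categories = sorted(set(values))
    vectors = [[1 if v == c else 0 for c in categories] for v in values]
    return vectors, categories

ages = [20, None, 40, 30]
cities = ["Paris", "Delhi", "Paris", "Tokyo"]

ages_filled = impute_mean(ages)              # [20, 30.0, 40, 30]
ages_scaled = min_max_scale(ages_filled)     # [0.0, 0.5, 1.0, 0.5]
city_vectors, city_names = one_hot(cities)   # columns: Delhi, Paris, Tokyo

print(ages_scaled)
print(city_names, city_vectors)
```

One practical point worth mentioning in an interview: the mean, minimum, and maximum should be computed on the training data only and then reused on the test data, so no information leaks from the test set.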

Question #44. What is the concept of bias-variance tradeoff in Machine Learning?

The bias-variance tradeoff is a key concept in machine learning that refers to the balance between two types of errors that can affect a model’s performance. Bias error occurs when a model is too simple and cannot capture the underlying patterns in the data, leading to high error on both training and test sets (underfitting). Variance error happens when a model is too complex and captures noise along with the patterns, resulting in high error on the test set while performing well on the training set (overfitting). The goal is to find a balance where the model is complex enough to capture the real patterns but simple enough to generalize well to new, unseen data. Getting this balance right keeps the error on unseen data as low as possible, rather than only the error on the training set. Interviewers often follow up by asking how you would address the bias-variance tradeoff when training a model; common answers include regularization, cross-validation, gathering more data, and adjusting model complexity.
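For squared-error loss, the tradeoff can be written down exactly. The notation below is an assumption introduced for this example: the data follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the model learned from a randomly drawn training set. The expected error at a point $x$ then splits into three parts:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

A very simple model tends to have a large first term, a very flexible model tends to have a large second term, and no model can reduce the third.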

Question #45. What is the significance of the A* algorithm in AI?

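The A* algorithm is important in AI because it finds a lowest-cost path from a starting point to a goal. Imagine you are in a maze and want to find the quickest way out; A* guides the search by ranking each candidate position by the distance already travelled plus an estimate (the heuristic) of the distance remaining. As long as the heuristic never overestimates the remaining distance, A* is guaranteed to return a shortest path, and it usually explores far fewer positions than an uninformed search such as Dijkstra’s algorithm or breadth-first search. Interviewers often follow up by asking how you would use A* to solve a navigation problem.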
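Here is a minimal sketch of A* on a small grid maze, assuming four-directional movement, a cost of 1 per step, and the Manhattan distance as the heuristic. The grid layout and the function name `a_star` are made up for illustration.

```python
import heapq

def a_star(grid, start, goal):
    """Return a shortest path as a list of (row, col) cells, or None.

    grid[r][c] == 0 is an open cell, 1 is a wall.
    """
    def h(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (g + h, g, cell)
    came_from = {}
    best_g = {start: 0}                 # cheapest known cost to reach each cell

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:    # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue                    # stale entry, a cheaper route was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if inside and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None                         # goal is unreachable

maze = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = a_star(maze, (0, 0), (3, 3))
print(path)
```

For a real navigation problem the cells would become road intersections, the step cost would become travel time or distance, and the heuristic would typically be the straight-line distance to the destination.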

Question #46. How do you evaluate the performance of an AI model?

Evaluating the performance of an AI model involves several important steps to ensure that the model is making accurate predictions and can generalize well to new data. Some common methods used are:

- Accuracy: This measures the percentage of correct predictions made by the model out of all predictions. It is simple but can be misleading if the classes are imbalanced.

- Precision and Recall: Precision is the percentage of true positive predictions out of all positive predictions made by the model. Recall is the percentage of true positive predictions out of all actual positive cases in the dataset.

- F1 Score: This is the harmonic mean of precision and recall, providing a single metric that balances both, especially useful when the classes are imbalanced.

- Confusion Matrix: This is a table that shows true positives, true negatives, false positives, and false negatives, giving a detailed breakdown of the model’s performance.

- ROC Curve and AUC: The Receiver Operating Characteristic (ROC) curve plots the true positive rate against the false positive rate at different classification thresholds, and the Area Under the Curve (AUC) summarizes the model’s performance across all of those thresholds in a single number.

- Cross-Validation: This technique involves dividing the dataset into multiple parts, training the model on some parts, and testing it on others, to ensure that the model performs well on different subsets of the data.

By using these metrics and techniques, you can get a comprehensive understanding of how well your AI model is performing and where it might need improvement.
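To see how the classification metrics relate to each other, here is a small sketch that computes them by hand for a binary classifier. The labels and predictions are invented sample data, and the function names are chosen for this example only.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, true negatives, false positives, false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn

def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]   # 4 actual positives, 6 negatives
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]   # 3 hits, 1 miss, 2 false alarms

print(confusion_counts(y_true, y_pred))   # (3, 4, 2, 1)
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example the model finds 3 of the 4 real positives (recall 0.75), but only 3 of its 5 positive predictions are correct (precision 0.6), a difference that accuracy alone would hide.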

Question #47. What are the limitations of AI today?

Despite the tremendous progress in artificial intelligence, there are several limitations to consider:

- Lack of Common Sense: AI models can process and analyze data, but they often lack the common sense reasoning that humans naturally possess.

- Data Dependency: AI requires vast amounts of data to learn effectively. Poor quality or biased data can lead to inaccurate or unfair outcomes.

- Interpretability: Many AI models, especially deep learning ones, operate as “black boxes,” making it difficult to understand how they make decisions.

- Ethical Concerns: There are ongoing debates about the ethical implications of AI, including issues of privacy, job displacement, and algorithmic bias.

- Generalization: While AI can perform specific tasks well, it struggles with tasks requiring general intelligence or transferring knowledge across different domains.

- Resource Intensive: Developing and running advanced AI models can be computationally expensive and require substantial resources.

By understanding these limitations, we can set more realistic expectations and work towards overcoming the challenges associated with AI technology.

Question #48. Can you explain how computer vision and AI are related?

Computer vision is a field of artificial intelligence (AI) that enables computers to interpret and make decisions based on visual data from the world, such as images or videos. While AI is the broader concept of machines being able to carry out tasks in a way that we would consider “smart,” computer vision is a specialized branch within AI focused on the visual understanding of information. Essentially, computer vision helps AI to “see” and understand visuals, much like human eyes and brains work together to process and comprehend what we look at every day.

Question #49. Which is better for image classification? Supervised or unsupervised classification? Justify.

Supervised classification is generally better for image classification tasks. In supervised classification, the model is trained using a labeled dataset, which means that every image in the training set is already tagged with the correct label. This labeling allows the model to learn the relationship between the images and their corresponding labels. As a result, supervised models tend to achieve higher accuracy because they have clear guidance on what to look for during the training process. On the other hand, unsupervised classification deals with unlabeled data and the model has to find patterns or groupings on its own, which can be less effective for detailed tasks like image classification. While unsupervised methods are valuable for discovering hidden structures in data, the lack of labels often leads to less accurate classifications compared to supervised learning.

Artificial Intelligence Scenario Based Questions

Question #50. A manufacturing company aims to reduce downtime and lower maintenance costs for their machinery. How can AI assist in achieving these goals?

AI can help a manufacturing company minimize downtime and reduce maintenance costs in several ways. Firstly, AI-powered predictive maintenance systems can analyse data from sensors on machinery to predict when a machine is likely to fail. This allows the company to perform maintenance just in time, before a failure occurs, rather than on a fixed schedule, which can be more costly. Secondly, AI can optimise the maintenance schedule by identifying the most critical machines that need attention, ensuring that resources are used efficiently. Additionally, AI can help identify patterns and root causes of frequent breakdowns, which can lead to long-term improvements and reduce the need for repairs over time. By implementing these AI technologies, the company can keep their machinery running smoothly, minimise unexpected stoppages, and save on maintenance costs.
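As a very simplified sketch of the sensor-analysis idea, the snippet below flags readings that deviate strongly from a machine’s normal behaviour using a z-score. The vibration values, the threshold of 3, and the function name `find_anomalies` are assumptions made for this example; production systems typically use trained models over many sensors rather than a single statistical rule.

```python
from statistics import mean, stdev

def find_anomalies(baseline, readings, z_threshold=3.0):
    """Return indices of readings far from the baseline mean.

    A reading is flagged when it lies more than z_threshold standard
    deviations away from the mean of the healthy baseline data.
    """
    mu, sigma = mean(baseline), stdev(baseline)
    return [i for i, r in enumerate(readings)
            if abs(r - mu) / sigma > z_threshold]

# Hypothetical vibration levels recorded while the machine was healthy.
baseline_vibration = [0.50, 0.52, 0.49, 0.51, 0.48, 0.50, 0.53, 0.47]
# New readings from the same sensor; two of them are unusually high.
new_readings = [0.51, 0.49, 0.72, 0.50, 0.80]

flagged = find_anomalies(baseline_vibration, new_readings)
print(flagged)  # indices of readings worth a maintenance check
```

Flagged readings would then trigger an inspection before the machine actually fails, which is the core of the "maintain just in time" approach.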

Question #51. An e-commerce platform seeks to boost sales by providing personalized product recommendations to its users. How can AI be leveraged to elevate their shopping experience?

AI can significantly enhance the shopping experience on an e-commerce platform by providing personalized product recommendations. Using machine learning algorithms, AI can analyze user behavior, such as browsing history, past purchases, and items added to the cart, to understand individual preferences. It can then suggest products that are most likely to interest the user. Furthermore, AI can use data from similar users to recommend trending or complementary items, helping users discover new products they might not have found on their own. By making the shopping experience more tailored and relevant, AI can increase user satisfaction and drive higher sales for the platform.

Question #52. A financial institution faces sophisticated cyber threats that are evolving rapidly. How can AI enhance their cybersecurity measures?

AI can help strengthen the cybersecurity measures of a financial institution by continuously monitoring network activity and identifying suspicious patterns. By using machine learning, AI can learn from past cyber attacks and flag activity that resembles known threats or deviates from normal behaviour, often before any damage is done. This means the institution can respond quickly to potential breaches and prevent data theft or damage. Additionally, AI can automate many security processes, such as scanning for vulnerabilities and prioritising patches, which helps keep systems up-to-date and secure.

Question #53. How can a healthcare provider enhance diagnostic accuracy and patient outcomes using AI?

AI can play a crucial role in improving diagnostic accuracy and patient outcomes by analyzing large datasets to identify patterns and make predictions. For example, AI can review medical images like X-rays or MRIs to detect early signs of diseases such as cancer, which might be missed by human eyes. Additionally, AI can help doctors by providing decision support, suggesting potential diagnoses based on the patient’s symptoms and medical history. This not only speeds up the diagnostic process but also reduces the likelihood of errors, ultimately leading to better patient care and outcomes.

Question #54. A smart city strives to optimize energy consumption and minimize its carbon footprint. How can AI contribute to achieving these objectives?

AI can help a smart city optimize energy consumption and reduce its carbon footprint by analyzing data from various sensors and energy meters. By predicting energy usage patterns, AI can suggest ways to save power, such as turning off streetlights when they’re not needed or adjusting heating and cooling systems based on the weather and building occupancy. AI can also manage renewable energy sources more efficiently, ensuring that solar panels or wind turbines are used to their full potential. This helps the city use less energy overall and lowers its carbon emissions.

Question #55. A marketing agency wants to generate creative content for its clients’ campaigns using AI. How can AI be applied in this context?

AI can assist a marketing agency in generating creative content by using natural language processing and machine learning algorithms to analyze existing successful campaigns and identify patterns. It can then use this data to create new content that resonates with the target audience. For example, AI can generate engaging social media posts, write persuasive ad copy, or come up with innovative ideas for blog articles. Additionally, AI tools can personalize content based on consumer data, ensuring that the messages are relevant and impactful. This not only speeds up the content creation process but also helps in delivering more effective marketing campaigns.

Question #56. How will you leverage AI to promote your new business?

If I were starting a new business, I would use AI to promote it by creating personalized marketing campaigns that target specific groups of potential customers. AI can analyze data from social media, website traffic, and customer feedback to understand what people are interested in and what they need. With this information, I could create tailored advertisements and social media posts that speak directly to my audience’s preferences. AI could also help me optimize my website by suggesting ways to improve user experience and increase online visibility through search engine optimization. Additionally, AI-powered chatbots could provide instant customer support, making it easier for visitors to get the information they need and feel more connected to my brand.

Question #57. How can AI help a farmer whose crop yield is declining despite hard work in the fields?

AI can help the farmer in several ways to improve his crop yield. For instance, AI can analyze data about soil quality, weather conditions, and crop health to provide the farmer with specific recommendations on when to plant and water the crops. It can also identify any pests or diseases affecting the plants early on, allowing for timely intervention. Additionally, AI-powered drones can monitor the fields and provide real-time insights, ensuring that the farmer is making informed decisions to optimize his farming practices.

Question #58. How does the “Customers who bought this also bought” section on e-commerce sites like Amazon work?

This feature on Amazon works through a recommendation system powered by AI. When a customer buys a product, Amazon’s AI analyzes the purchasing behavior of other customers who bought the same item. It looks for patterns and commonalities in their purchases. By understanding these patterns, the AI can suggest additional items that other customers frequently bought along with the first product. This helps in making personalized recommendations, encouraging customers to find more products they might be interested in, ultimately enhancing their shopping experience and increasing sales for Amazon.
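The core idea of counting which items appear together in past orders can be sketched in a few lines. This is a toy illustration, not Amazon’s actual system; the order data and the function names `build_co_purchase_counts` and `also_bought` are made up for this example.

```python
from collections import Counter, defaultdict
from itertools import permutations

def build_co_purchase_counts(orders):
    """For every item, count how often each other item shares an order with it."""
    counts = defaultdict(Counter)
    for order in orders:
        for item, other in permutations(set(order), 2):
            counts[item][other] += 1
    return counts

def also_bought(counts, item, top_n=2):
    """Return the items most frequently bought together with `item`."""
    return [other for other, _ in counts[item].most_common(top_n)]

orders = [
    ["phone", "case", "charger"],
    ["phone", "case"],
    ["phone", "charger", "earbuds"],
    ["laptop", "mouse"],
    ["phone", "case"],
]

counts = build_co_purchase_counts(orders)
print(also_bought(counts, "phone"))   # ['case', 'charger']
print(also_bought(counts, "laptop"))  # ['mouse']
```

Real recommendation systems refine these raw counts, for example by correcting for items that are popular with everyone and by weighting recent purchases more heavily.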

Question #59. What is a Chatbot? How can they enhance customer support?

A chatbot is a computer program designed to simulate conversation with human users, especially over the Internet. Chatbots enhance customer support by responding to inquiries instantly and around the clock. They can answer common questions, help troubleshoot issues, and guide customers through processes, all without the need for human intervention. This means customers get quick assistance, reducing wait times and improving their overall experience. For more complex issues, chatbots can hand the conversation over to a human representative, ensuring that customers receive the help they need.

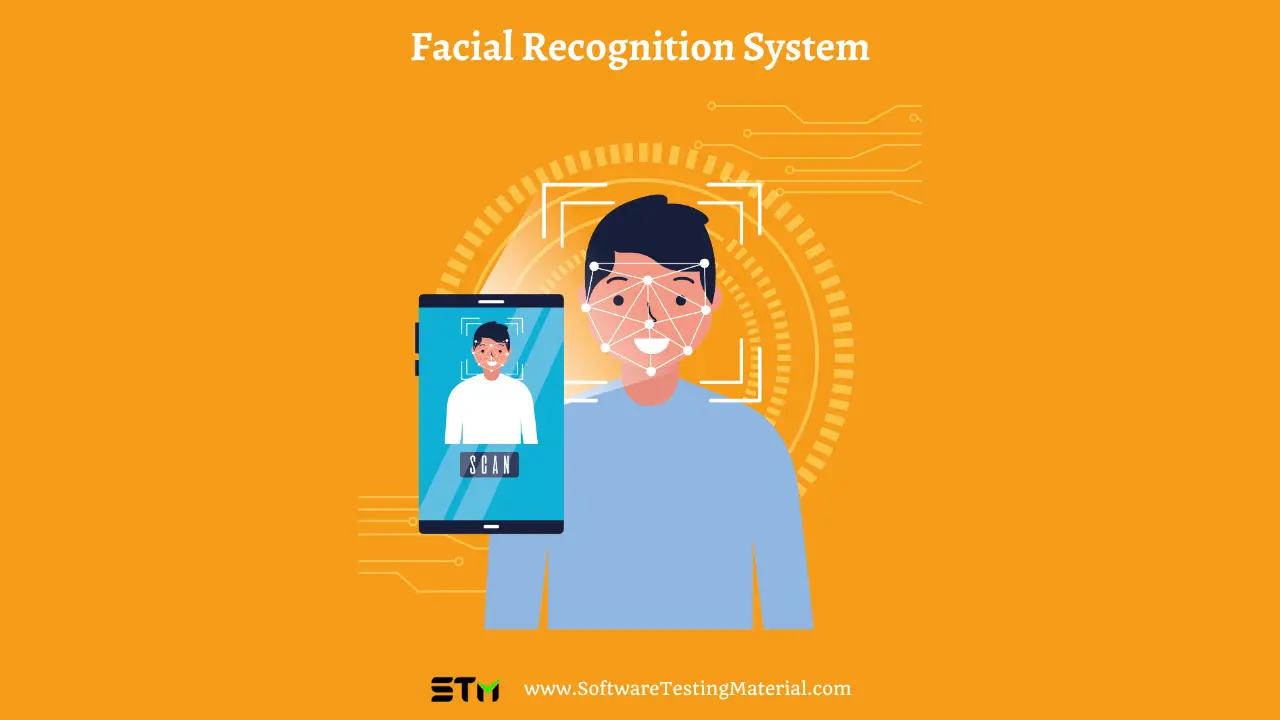

Question #60. How would you explain a face detection system to a beginner?

A face detection system works by analyzing images or videos to find and locate human faces. The input is a photo or a frame from a camera, which the system treats as a grid of pixels, each holding light and color information. It then scans the image for patterns that look like a human face, such as the arrangement of the eyes, nose, and mouth. It uses algorithms, which are like sets of instructions, to check whether these patterns match the typical features of a face. Once a match is found, the system marks the area where the face is located, usually with a box around it. This process is fast enough to run in real time on live video as well as on stored images. Note that face detection only finds where faces are; identifying whose face it is requires a separate step called face recognition.
